Notebook

This is a personal notebook. See repo here. Let's see how often I can keep this updated...

How to update my note:

https://rust-lang.github.io/mdBook

And take a look at Markdown Help for some hints.

mdbook serve --dest-dir docs --open- Edit content

- Then perhaps build again

mdbook build --dest-dir docs - Then push

Some hidden code here

use warp::Filter; #[tokio::main] async fn main() { let routes = warp::any().map(|| "Hello, World!"); warp::serve(routes).run(([127, 0, 0, 1], 3030)).await; }

How to add some graphs?

https://github.com/badboy/mdbook-mermaid

And see the Dockerfile in the repo how to make an image that can build the site.

About github page build

This github action peaceiris/actions-gh-pages seems really handy. See the config file here

Note 1

Some random notes that could be useful:

How to make http slides:

https://liufuyang.github.io/http-kv/demo.html

ToDo

Courses to study

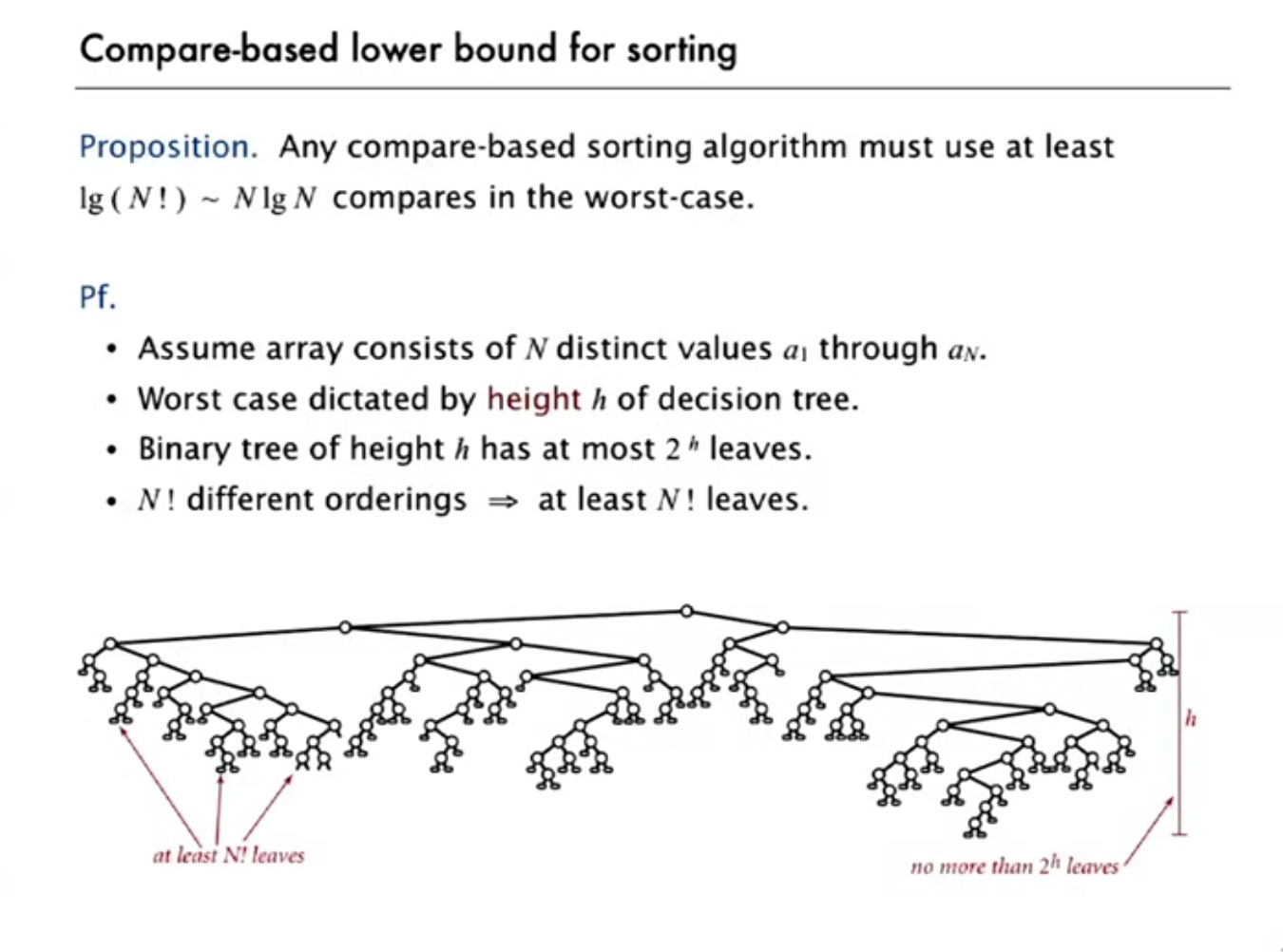

Algorithms

Big O notations

Commonly-used notations in the theory of algorithms

| notation | example | provides | shorthand for | used to |

|---|---|---|---|---|

| Big Theta | \( \Theta (N^2) \) | asymptotic order of growth | \( {1 \over 2} \ N^2 \) \( 10 \ N^2 \) \( 5 N^2 + 2 N \log N + 3 N \) | classify algorithms |

| Big Oh | \( O (N^2) \) | \(\Theta(N^2) \) and smaller | \( 10 \ N^2 \) \( 100 \ N \) \( 2 N \log N + 3 N \) | develop upper bounds |

| Big Omega | \( \Omega (N^2) \) | \( \Theta(N^2) \) and larger | \( {1 \over 2} \ N^2 \) \( N^5 \) \( N^3 + 2 N \log N + 3 N \) | develop lower bounds |

| Tilde * | \( \sim 10 \ N^2 \) | leading term | \( 10 \ N^2 \) \( 10 \ N^2 + 22 N \log N \) \( 10 \ N^2 + 2 N + 37 \) | provide approximate model |

* A common mistake is interpreting Big-Oh as an approximate model Tilde.

Stack and Queue

Two major implementations

- Linked list impl

- Array impl

which can both implement stack and queue.

Linked list impl

For stack or queue, a single direction link list will do,

so only need a head point to the head node, and a tail point to the tail node.

(head) -> (a,) -> (b,) -> (c,)

(tail) - - - - - - - - - - ^

A simple unsafe Rust implementation could use structures like:

#![allow(unused)] fn main() { pub struct Node<T> { element: T, next: Option<Box<Node<T>>>, } pub struct LinkedList<T> { head: Option<Box<Node<T>>>, tail: *mut Node<T>, } }

More code can be see here on my own bad Rust stack/queue implementation

pushadd node viahead;enqueueadd node viatail;popordequeue, just take out nodes fromhead.

Or in short adding on both ends, removing from head only.

Array impl

0 1 2 3 4 5 6 7

[__, __, a, b, c, __, __, __,]

(head) ---^

(tail) - - - - - - - ^

pushorenqueue, just add viatail;popremoves nodes fromtail;dequeueremoves fromhead;

Or in short adding from tail only, removing from both ends.

Other trivial details:

- When reaching the end while adding, increase capacity by factor of 2;

- When

tailless than 1/4 of capacity, can reduce capacity by 1/2 of current capacity, to save space; - In Java, when removing element from the list, besides change

head and tail values, also set

nullto the array so to let those removed nodes can be garbage collected later.

Dequeue - a double-ended queue

Dequeue -a double-ended queue or deque (pronounced “deck”)

is a generalization of a stack and a queue that supports adding and removing items from either the front or the back of the data structure.

One type of implementation could be using a double linked list, so both ends can remove nodes.

Another approach (I suppose) could be using two stacks, with either stack implementations mentioned above.

Then one stack is kept as positive while another kept as

negative.

So the deque can probably be implemented as something like:

// add the item to the front

public void addFirst(Item item) {

negative.push();

}

// add the item to the back

public void addLast(Item item) {

positive.push();

}

// remove and return the item from the front

public Optional<Item> removeFirst() {

if (!negative.isEmpty) {

return negative.pop();

} else {

return positive.dequeue();

}

}

// remove and return the item from the back

public Optional<Item> removeLast() {

if (!positive.isEmpty) {

return positive.pop();

} else {

return negative.dequeue();

}

}

Haven't tried to do my homework with the Princeton course,

but hopefully this works. Otherwise the code might later

be at here.

Update: As the homework requires constant worst time so

I used a double linked list to implement it. So I can

just guess now the above idea will work :)

RandomizedQueue

A RandomizedQueue can be implemented with a normal

array implementation queue, plus using Knuth Shuffle

during enqueue operation:

public void enqueue(Item item) {

if (item == null) {

throw new IllegalArgumentException();

}

if (items.length == tail) {

resize(Math.max(items.length, size() * 2));

}

items[tail] = item;

tail++;

swap(tail - 1, StdRandom.uniform(head, tail));

}

Key is on that swap call. Basically the shuffle

idea is very simple, when adding a new item into

the array, then randomly select an item from the

array (including the newly added one) then swap

the newly added one with the selected item.

See code here.

By the way, this Knuth Shuffle idea seems

pretty powerful as it uses linear time for shuffling

a N-th array. Better than make N random floats and

sort them to have a new index list.

Linked list impl vs Array impl

I guess simply put,

linked listis good for constant time operations in the worst case, though slower each time, an uses more space;resizing arrayis in most cases very fast, occasionally slow when resizing, with the claim of adding an element has constant amortized time cost. And less wasted space

Priority Queues (a.k.a Binary heap)

Besides the "linear" array/linked-list type of queue implementation,

Binary heap can also be used to construct

an array representation of a heap-ordered complete binary tree,

which can be used as a special queue where you can always get the most

min (or max) value of a queue. See more about it in the search algorithm

session below.

And it can also be used as a sorting algoritm called

Heap sort. See more notes about Priority Queues here

Use stack/queue for Search Algorithm

One quite useful thing about stack and queue is that

stackcan be used forDepth-First Search(DFS)queuecan be used forBreadth-First Search(BFS)

And Priority queue can be used for even faster search algoritms to

only focus on the most possible directions (the shortest path for example).

See more notes about Priority Queues here

Quite interesting.

It seems that a general "Graph Search" type of problem can be solved by this abstract framework:

agent- entity that perceives its environment and acts upon that environmentstate- a configuration of the agent and its environment;initial state- the state in which the agent beginsactions- choices that can be made in a state, like edges in graphs;ACTIONS(s)returns the set of actions that can be executed in state stransition modelorRESULT(s, a)returns the state resulting from performing action a in state sgoal test- way to determine whether a given state is a goal statepath cost- numerical cost associated with a given pathfrontier- a stack or queue to keep track of the nodes to be explored

Then with a node defined as

a data structure that keeps track of

- a

state - a

parent(node that generated this node) - an

action(action applied to parent to get node) - a

path cost(from initial state to node)

Then a search algorithm can be defined as:

Search algorithm

- Start with a

frontierthat contains the initial state. - Start with an empty explored set.

- Repeat:

- If the

frontieris empty, then no solution. - Remove a

nodefrom thefrontier. (Also using a set to keep track of visitednodes) - If

nodecontains goal state (goal test), return the solution. - Add the

nodeto the explored set. - Expand

node(ACTIONS + RESULTS), add resultingnodesto thefrontierif they aren't already in thefrontieror the explored set.

- If the

For example, in a simple maze search problem, the state of

the node is just the current position, the actions of the

node is just the next possible directions that the agent can go to, leading to the following states.

In a social network problem, for example in the homework's

movie actors degree problem, the state of

the node is just the actor id, the actions of the

node is just the movies this actor performed, which can lead to other state (or actors).

Some example code on the degree problem might look like this.

def shortest_path(source, target):

"""

Returns the shortest list of (movie_id, person_id) pairs that connect the source to the target.

If no possible path, returns None.

Action: movie id

State: person id

"""

start = Node(state=source, parent=None, action=None)

frontier = QueueFrontier()

frontier.add(start)

explored = set()

while True:

if frontier.empty():

return None

node = frontier.remove()

explored.add(node.state)

for action in people[node.state]["movies"]:

for state in movies[action]["stars"]:

if not frontier.contains_state(state) and state not in explored:

child = Node(state=state, parent=node, action=action)

frontier.add(child)

checkNode = child

if checkNode.state == target:

actions_and_states = []

while checkNode.parent is not None:

actions_and_states.append((checkNode.action, checkNode.state))

checkNode = checkNode.parent

actions_and_states.reverse()

return actions_and_states

And normally a queue is used as the frontier in order

to perform Breadth-First Search - which seem to be the

best option for general problems as you want to find the shortest path to the goal.

One variant of the algorithms is a greedy best-first search

A* search: search algorithm that expands node with lowest value ofg(n) + h(n)g(n)= cost to reach nodeh(n)= estimated cost to goal- optimal if

h(n)is admissible (never overestimates the true cost), andh(n)is consistent (for every node n and successor n' with step cost c,h(n) ≤ h(n') + c)

To facilitate a fast way to pick "a lowest value (or highest) from a queue",

a data structure called Priority Queue

can be used as it as uses binary heap to achieve log N order-of-growth for

both queue insert and queue delete operation.

Another variant of this type of algorithm is Adversarial Search - which is used for problems like Tic-Tac-Toe games

So for games:

- S0 : initial state

- PLAYER(s) : returns which player to move in state s

- ACTIONS(s) : returns legal moves in state s

- RESULT(s, a) : returns state after action a taken in state s

- TERMINAL(s) : checks if state s is a terminal state

- UTILITY(s) : final numerical value for terminal state s

One method is called MinMax

- Given a state s:

- MAX picks action a in ACTIONS(s) that produces highest value of MIN-VALUE(RESULT(s, a))

- MIN picks action a in ACTIONS(s) that produces smallest value of MAX-VALUE(RESULT(s, a))

Or in code as:

function MAX-VALUE(state):

if TERMINAL(state):

return UTILITY(state)

v = -∞

for action in ACTIONS(state):

v = MAX(v, MIN-VALUE(RESULT(state, action)))

return v

function MIN-VALUE(state):

if TERMINAL(state):

return UTILITY(state)

v = ∞

for action in ACTIONS(state):

v = MIN(v, MAX-VALUE(RESULT(state, action)))

return v

And some special pruning method such as Alpha-Beta Pruning is needed for game problems with very large search space.

Divide and Conquer

- Divide into smaller problems

- Conquer via recursive calls

- Combine solutions of sub-problems into one for the original problem.

Typical problems:

-

Merge sort (recursive or bottom up)

-

Karatsuba Multiplication

-

Counting Inversions

Example: (1, 3, 5, 2, 4, 6), having inversions: (3,2), (5,2), (5, 4)

Can be used for: calculate the similarity between 2 persons' 10 movies sort order.

Counting Inversions

Naive implementation is \( O(n^2) \) as we need 2 for loops. Via divide and conquer, what we need is this

Count(array a, length n) {

if n == 1 return 0

else

x = Count(left half of a, n/2)

y = Count(right half of a, n/2)

z = CountSplitInv(a, n)

return x + y + z

}

CountSplitInv counting the split inversions, where first

index is in the first half array, and second index is in the

second half array.

Then the question is, can we do CountSplitInv with

\( O(n) \)? If so, the divide part has \( O(\log n) \), which gives us the final algorithms speed of \( O(n \log n) \). Intuitively feels not possible.

Piggybacking on Merge Sort

SortAndCount(array a, length n) {

if n == 1 return 0

else

b, x = SortAndCount(left half of a, n/2)

c, y = SortAndCount(right half of a, n/2)

d, z = MergeAndCountSplitInv(b, c, n)

return d, x + y + z

}

After sorting, we can do the trick, or "piggybacking" while merge:

i=0

j=0

z=0

for k = 0 .. n-1 {

if b[i] <= c[j] {

d[k] = b[i]

i++

} else {

d[k] = c[j]

j++

z += b.len - i // piggybacking part of merge

}

}

So if b's element all less than c's element,

before j starts to add, i will be as b.len, making z=0 in the end.

Or the general claim:

Claim: the numberr of split inversions involving an element

c_jfrom 2nd arraycis precisely the number of elements left in the 1st arraybwhenc_jis copied to the outputd.

So basically we ended with a very similar thing to Merge Sort, only one more operation on the merge operations. So we achieved \( O(n \log n) \). Pretty impressive.

The Master Method

\[ \begin{align} \text{If } \quad T(n) & <= a T (\frac{n}{b}) + O(n^d) \\ \text{Then } \quad T(n) & = \begin{cases} O(n^d \log n) & \quad \text{if } a = b^d \text{ (Case 1)} \\ O(n^d) & \quad \text{if } a < b^d \text{ (Case 2)} \\ O(n^{\log_{b}{a} }) & \quad \text{if } a > b^d \text{ (Case 3)} \end{cases} \end{align} \]

For example

- merge sort having

a=2, b=2, d=1so it is \( O(n \log n) \), which is case 1 - binary search having

a=1, b=2, d=0so it is \( O(\log n) \), which is case 1 - Karatsuba Multiplication

a=3, b=2, d=1so it is \( O(n^{\log_2 3}) = O(n^{1.59}) \), which is case 3

A simple proof could be: At each level \( j=0,1,2,..,log_b(n) \), there are \(a^j\) subproblems, each of size \( n \over b^j \). Then the total number of operations needed to solve the whole problem for each level is: \[ a^j C {\left[\frac{n}{b^j}\right]}^d = C n^d {\left[\frac{a}{b^d}\right]}^j \] Thus the total number of the whole problem is: \[ \text{total work} \leq \displaystyle\sum_{j=0}^{\log_b n} C n^d {\left[\frac{a}{b^d}\right]}^j \] where

- \( a \): rate of subproblem proliferation (

RSP) - \( b^d \): rate of work shrinkage per subproblem (

RWS)

And we see:

- If

RSP < RWS, then the amount of work is decreasing with the recursion levelj - If

RSP > RWS, then the amount of work is increasing with the recursion levelj - If

RSP = RWS, then the amount of work is the same at every recursion levelj

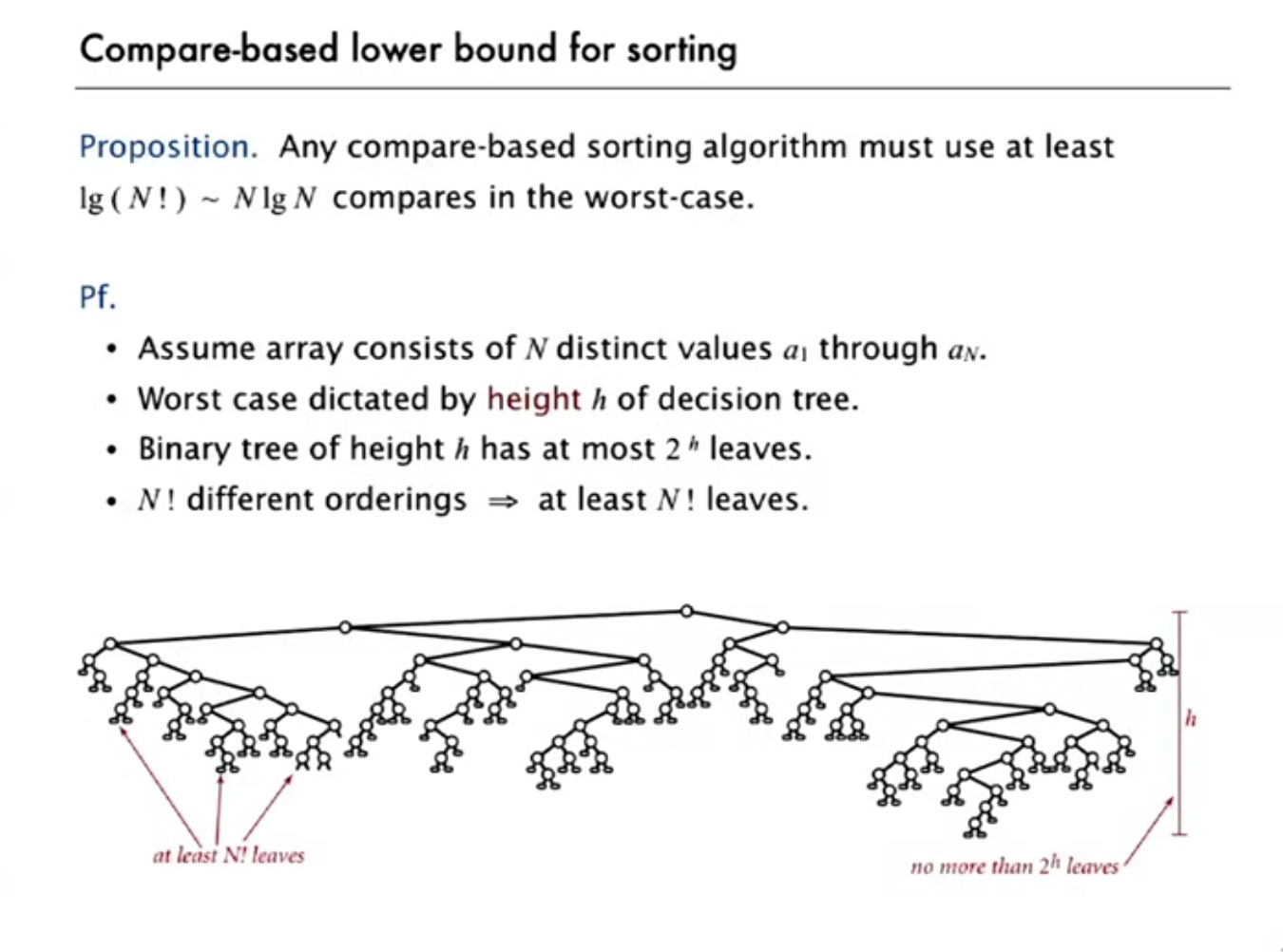

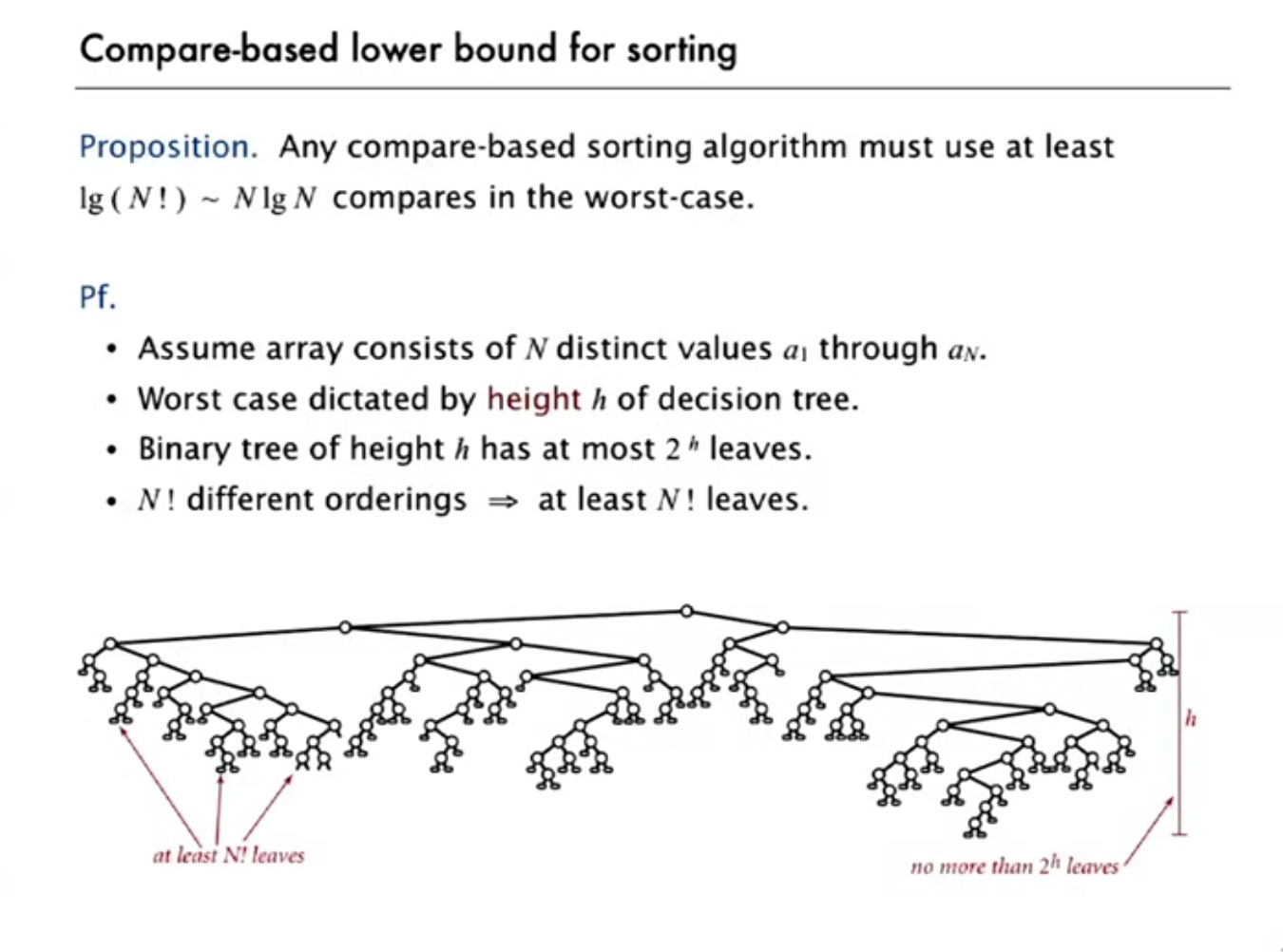

Sort

Merge sort

- \( n \log(n) \) in worst case

- a

stablesort (sort by column A, then sort by column B, then for the same B, order of A preserves) - simple to understand, divide and conquer (or bottom up to avoid recursion);

log(n)levels, and each level's merge takesnoperation, thus \( n \log(n) \) will do

Quick sort

- \( n \log(n) \) in worst case

- not a

stablesort - more tricky but not difficult to understand, divide and conquer:

make sure an element's left are all smaller (or equal to) it, and the right part are all bigger than it (let's called itpartitioned); then continue to sort both on left and right part;

Quick sort partition

To make an array partitioned by, for eg. the first element:

[3, 5, 2, 6, 1, 4]

i---^

j---^

Start with i and j both after first element.

i stands for left of me is smaller than pivot; j stands for left of me is partitioned.

So basically we loop each element until j is n;

For each step:

- If

a[j] >= pivot, then justj++; - If

a[j] < pivot, thenswap(i, j),i++, j++; - When

jreaches the end,swap(i-1, 0), returni-1

[3, 5, 2, 6, 1, 4]

i---^

j------^

swap

[3, 2, 5, 6, 1, 4]

i------^

j---------^

[3, 2, 5, 6, 1, 4]

i------^

j------------^

swap

[3, 2, 1, 6, 5, 4]

i---------^

j------------^

[3, 2, 1, 6, 5, 4]

i---------^

j-----------------^

then last swap and return i=2

[1, 2, 3, 6, 5, 4]

i---------^

j-----------------^

Quick sort pivot choose

If an input array is already sorted, simply choose pivod as the first element of the array will result the running time of quick sort to be \( n^2 \). That is not quick.

So if some "maigc" method out there can always choose the midiean value as the pivot, then the quick sort running back falls back to \( n \log(n) \).

A common way is to choose a random element as pivot. So with Random Pivot implemented, it can be approved that the total number of comparisons of quik-sort algorithms is equal or less than \( 2 n \ln(n) \), appriximately.

So quick sort will have more comparisions than merge sort, but as it needs no extra memory, so it is generally faster than merge sort.

Quick sort partition can be tricky

Many textbook implementations go quadratic if array

- Is sorted or reverse sorted

- Has many duplicates (even if randomized) (the standford course impl perhaps?)

Quick sort practical improvements

- Median of sample

- Best choice of pivot item = median

- Estimate true median by taking median of sample

- Median-of-3 (random) items (which is also used in Rust's quicksort code)

Quick select

The expected running time to find the median (or the kth largest value) of an array of nn distinct keys using randomized quickselect is linear.

Duplicated keys - 3-way partition

Simply put all equal values together

- 3-way partitioning: Goal - partition array into 3 parts

- lo, lt, gt, hi

- start with

lt=lo+1, gt=lo+1 - end with

higoes out length range

For each step:

- If

a[hi] > pivot, then justhi++; - If

a[hi] == pivot, thenswap(gt, hi),gt++, hi++ - If

a[hi] < pivot, thenswap(gt, hi),swap(lt, gt),lt++, gt++, hi++; - When

hireaches the end,swap(lt-1, 0), returnlt-1, gt

[3, 2, 1, 3, 3, 5, 6, 3, 1]

lt--------^

gt--------------^

hi-----------------^

[3, 2, 1, 3, 3, 5, 6, 3, 1]

lt--------^

gt--------------^

hi--------------------^

[3, 2, 1, 3, 3, 3, 6, 5, 1]

lt--------^

gt-----------------^

hi--------------------^

[3, 2, 1, 3, 3, 3, 6, 5, 1]

lt--------^

gt-----------------^

hi-----------------------^

swap(gt, hi)

[3, 2, 1, 3, 3, 3, 1, 5, 6]

lt--------^

gt-----------------^

hi-----------------------^

swap(lt, gt)

[3, 2, 1, 1, 3, 3, 3, 5, 6]

lt--------^

gt-----------------^

hi-----------------------^

lt++, gt++, hi++

[3, 2, 1, 1, 3, 3, 3, 5, 6]

lt-----------^

gt--------------------^

hi--------------------------^

Or a "from two end" approach could be like this:

Bottom line

Randomized quicksort with 3-way partitioning reduced running time from linearithmic to liner in broad class of applications.

Java Implementation

Arrays.sort() in Java use mergesort instead of quicksort when sorting reference types, as it is stable and guarantees \( n \log(n) \) performance

Tukey's ninther

Median of the median of 3 samples. Approximates the median of 9 evenly spaced entries. Uses at most 12 compares.

Seems used in Rust's quicksort as well.

Summary

Priority Queue - Binary Heap and Heap Sort

Priority Queue is super useful for fast Breadth-First Search (BFS) in algorithms like A* search, or it can be used in sort algorithm called Heap Sort (basically you make a Priority queue then pop out elements and they will be in order)

It needs to implement two operations efficiently:

- remove the maximum

- insert

Priority queue applications

- Event-driven simulation. [customers in a line, colliding particles]

- Numerical computation. [reducing roundoff error]

- Data compression. [Huffman codes]

- Graph searching. [Dijkstra's algorithm, Prim's algorithm]

- Number theory. [sum of powers]

- Artificial intelligence. [A* search]

- Statistics. [maintain largest M values in a sequence]

- Operating systems. [load balancing, interrupt handling]

- Discrete optimization. [bin packing, scheduling]

- Spam filtering. [Bayesian spam filter]

Priority Queue can be implemented via binary heap - which is an array representation of a heap-ordered complete binary tree.

- Largest key is

a[1], which is root of binary tree. - Can use array indices to move through tree.

- Parent of node at

kis atk/2. - Children of node at

kare at2kand2k+1.

- Parent of node at

Binary Heap

The binary heap is a data structure that can efficiently support the basic priority-queue operations. A binary tree is heap-ordered if the key in each node is larger than or equal to the keys in that node's two children (if any).

- Height of complete tree with N nodes is

lg N - Array representation, with indices start at 1, largest key at the root

- Take nodes in level order

- No explicit links needed!

- Largest key is a[1], which is root of binary tree

- Can use array indices to move through tree

- Parent of node at k is at

k/2 - Children of node at k are at

2kand2k+1

- Parent of node at k is at

Here is the code summary:

public class MaxPQ<Key extends Comparable<Key>>

{

private Key[] pq;

private int N;

public MaxPQ(int capacity)

{ pq = (Key[]) new Comparable[capacity+1]; }

public boolean isEmpty()

{ return N == 0; }

private boolean less(int i, int j)

{ return pq[i].compareTo(pq[j]) < 0; }

private void exch(int i, int j)

{ Key t = pq[i]; pq[i] = pq[j]; pq[j] = t; }

// promotion

private void swim(int k)

{

while (k > 1 && less(k/2, k)) {

exch(k, k/2);

k = k/2;

}

}

// insert

public void insert(Key x) {

pq[++N] = x;

swim(N);

}

// demotion

private void sink(int k) {

while (2*k <= N) {

int j = 2*k;

if (j < N && less(j, j+1)) j++;

if (!less(k, j)) break;

exch(k, j);

k = j;

}

}

// delete max

public Key delMax() {

Key max = pq[1];

exch(1, N--);

sink(1);

pq[N+1] = null; // prevent loitering

return max;

}

}

One more note: Use immutable keys if you can!

Heapsort

public static void sort(Comparable[] a) {

int N = a.length;

// first pass - heap construction

for (int k = N/2; k >= 1; k--)

sink(a, k, N);

// second pass - remove max one at a time

while (N > 1) {

exch(a, 1, N);

sink(a, 1, --N);

}

}

- Heap construction is in linear time, uses ≤ 2 N compares and exchanges

- No extra memory needed, ≤ 2 N log N compares and exchanges !!!

Heapsort significane In-place sorting algorithm

- Mergesort: need extra space, (otherwise there is a not practical way)

- Quicksort: quadratic time in worst case (N ln N via probabilistic guarantee, fast in practise)

- Heapsort: Yes ! 2 N log N worst case

Bottom line. Heapsort is optimal for both time and space, but:

- Inner loop longer than quicksort’s. (each time sink needs to compare with 2 children)

- Makes poor use of cache memory. (memory ref jumps around too much)

- Not stable.

Dynamic programming

Fibonacci

Recursion - Exponential waste

A novice programmer might implement a recersion like this below. But it has a problem called expoential waste.

public class FibonacciR

{

public static long F(int n)

{

if (n == 0) return 0;

if (n == 1) return 1;

return F(n-1) + F(n-2);

}

public static void main(String[] args)

{

int n = Integer.parseInt(args[0]);

StdOut.println(F(n));

}

}

The issue of the recursion above is that there are many duplicated calculations performed. So the core of the algorithms of opertimization is to reduced the number of operations. And since we have many calculation already done so there should be a way to save the results, then later calculations can use the previous calculatined values.

This could be the core idea of Dynamic programming.

Avoiding exponential waste

Memoization

- Maintain an array

memo[]to remember all computed values. - If value known, just return it.

- Otherwise, compute it, remember it, and then return it.

public class FibonacciM

{

static long[] memo = new long[100];

public static long F(int n)

{

if (n == 0) return 0;

if (n == 1) return 1;

if (memo[n] == 0)

memo[n] = F(n-1) + F(n-2);

return memo[n];

}

public static void main(String[] args)

{

int n = Integer.parseInt(args[0]);

StdOut.println(F(n));

}

}

Dynamic programming

Dynamic programming.

- Build computation from the "bottom up".

- Solve small subproblems and save solutions.

- Use those solutions to build bigger solutions.

public class Fibonacci

{

public static void main(String[] args)

{

int n = Integer.parseInt(args[0]);

long[] F = new long[n+1];

F[0] = 0; F[1] = 1;

for (int i = 2; i <= n; i++)

F[i] = F[i-1] + F[i-2];

StdOut.println(F[n]);

}

}

DP example: Longest common subsequence (LCS)

LCS length implementation

public class LCS

{

public static void main(String[] args)

{

String s = args[0];

String t = args[1];

int M = s.length();

int N = t.length();

int[][] opt = new int[M+1][N+1];

for (int i = M-1; i >= 0; i--)

for (int j = N-1; j >= 0; j--)

if (s.charAt(i) == t.charAt(j))

opt[i][j] = opt[i+1][j+1] + 1;

else

opt[i][j] = Math.max(opt[i+1][j], opt[i][j+1]);

System.out.println(opt[0][0]);

}

}

More notes will come when the alg course touches on dynamic programming later. The above content are from the Princeton's course Computer Science: Programming with a Purpose.

A * Search homework

Write a program to solve the 8-puzzle problem (and its natural generalizations) using the A* search algorithm.

The problem

The 8-puzzle is a sliding puzzle that is played on a 3-by-3 grid with 8 square tiles labeled 1 through 8, plus a blank square. The goal is to rearrange the tiles so that they are in row-major order, using as few moves as possible. You are permitted to slide tiles either horizontally or vertically into the blank square. The following diagram shows a sequence of moves from an initial board (left) to the goal board (right).

Board data type

To begin, create a data type that models an n-by-n board with sliding tiles. Implement an immutable data type Board with the following API:

public class Board {

// create a board from an n-by-n array of tiles,

// where tiles[row][col] = tile at (row, col)

public Board(int[][] tiles)

// string representation of this board

public String toString()

// board dimension n

public int dimension()

// number of tiles out of place

public int hamming()

// sum of Manhattan distances between tiles and goal

public int manhattan()

// is this board the goal board?

public boolean isGoal()

// does this board equal y?

public boolean equals(Object y)

// all neighboring boards

public Iterable<Board> neighbors()

// a board that is obtained by exchanging any pair of tiles

public Board twin()

// unit testing (not graded)

public static void main(String[] args)

}

Constructor. You may assume that the constructor receives an n-by-n array containing the \( n^2 \) integers between 0 and \(n^2 − 1\), where 0 represents the blank square. You may also assume that 2 ≤ n < 128.

String representation. The toString() method returns a string composed of n + 1 lines. The first line contains the board size n; the remaining n lines contains the n-by-n grid of tiles in row-major order, using 0 to designate the blank square.

Hamming and Manhattan distances. To measure how close a board is to the goal board, we define two notions of distance.

The Hamming distance betweeen a board and the goal board is the number of tiles in the wrong position.

The Manhattan distance between a board and the goal board is the sum of the Manhattan distances (sum of the vertical and horizontal distance) from the tiles to their goal positions.

Comparing two boards for equality. Two boards are equal if they are have the same size and their corresponding tiles are in the same positions.

The equals() method is inherited from java.lang.Object, so it must obey all of Java’s requirements.

Neighboring boards. The neighbors() method returns an iterable containing the neighbors of the board. Depending on the location of the blank square, a board can have 2, 3, or 4 neighbors.

Unit testing. Your main() method should call each public method directly and help verify that they works as prescribed (e.g., by printing results to standard output).

Performance requirements. Your implementation should support all Board methods in time proportional to n2 (or better) in the worst case.

A* search. Now, we describe a solution to the 8-puzzle problem that illustrates a general artificial intelligence methodology known as the A* search algorithm.

We define a search node(or called as state in other context) of the game to be a board, the number of moves made to reach the board, and the previous search node. First, insert the initial search node (the initial board, 0 moves, and a null previous search node) into a priority queue. Then, delete from the priority queue the search node with the minimum priority, and insert onto the priority queue all neighboring search nodes (those that can be reached in one move from the dequeued search node). Repeat this procedure until the search node dequeued corresponds to the goal board.

The efficacy of this approach hinges on the choice of priority function for a search node. We consider two priority functions:

- The Hamming priority function is the Hamming distance of a board plus the number of moves made so far to get to the search node. Intuitively, a search node with a small number of tiles in the wrong position is close to the goal, and we prefer a search node if has been reached using a small number of moves.

- The Manhattan priority function is the Manhattan distance of a board plus the number of moves made so far to get to the search node.

To solve the puzzle from a given search node on the priority queue, the total number of moves we need to make (including those already made) is at least its priority, using either the Hamming or Manhattan priority function. Why? Consequently, when the goal board is dequeued, we have discovered not only a sequence of moves from the initial board to the goal board, but one that makes the fewest moves. (Challenge for the mathematically inclined: prove this fact.)

Game tree. One way to view the computation is as a game tree, where each search node is a node in the game tree and the children of a node correspond to its neighboring search nodes. The root of the game tree is the initial search node; the internal nodes have already been processed; the leaf nodes are maintained in a priority queue; at each step, the A* algorithm removes the node with the smallest priority from the priority queue and processes it (by adding its children to both the game tree and the priority queue).

For example, the following diagram illustrates the game tree after each of the first three steps of running the A* search algorithm on a 3-by-3 puzzle using the Manhattan priority function.

Solver data type. In this part, you will implement A* search to solve n-by-n slider puzzles. Create an immutable data type Solver with the following API:

public class Solver {

// find a solution to the initial board (using the A* algorithm)

public Solver(Board initial)

// is the initial board solvable? (see below)

public boolean isSolvable()

// min number of moves to solve initial board; -1 if unsolvable

public int moves()

// sequence of boards in a shortest solution; null if unsolvable

public Iterable<Board> solution()

// test client (see below)

public static void main(String[] args)

}

Implementation requirement. To implement the A* algorithm, you must use the MinPQ data type for the priority queue.

Corner cases.

- Throw an IllegalArgumentException in the constructor if the argument is null.

- Return -1 in moves() if the board is unsolvable.

- Return null in solution() if the board is unsolvable.

Test client. The following test client takes the name of an input file as a command-line argument and prints the minimum number of moves to solve the puzzle and a corresponding solution.

public static void main(String[] args) {

// create initial board from file

In in = new In(args[0]);

int n = in.readInt();

int[][] tiles = new int[n][n];

for (int i = 0; i < n; i++)

for (int j = 0; j < n; j++)

tiles[i][j] = in.readInt();

Board initial = new Board(tiles);

// solve the puzzle

Solver solver = new Solver(initial);

// print solution to standard output

if (!solver.isSolvable())

StdOut.println("No solution possible");

else {

StdOut.println("Minimum number of moves = " + solver.moves());

for (Board board : solver.solution())

StdOut.println(board);

}

}

The input file contains the board size n, followed by the n-by-n grid of tiles, using 0 to designate the blank square.

Two optimizations. To speed up your solver, implement the following two optimizations:

- The critical optimization. A* search has one annoying feature: search nodes corresponding to the same board are enqueued on the priority queue many times (e.g., the bottom-left search node in the game-tree diagram above). To reduce unnecessary exploration of useless search nodes, when considering the neighbors of a search node, don’t enqueue a neighbor if its board is the same as the board of the previous search node in the game tree.

- Caching the Hamming and Manhattan priorities. To avoid recomputing the Manhattan priority of a search node from scratch each time during various priority queue operations, pre-compute its value when you construct the search node; save it in an instance variable; and return the saved value as needed. This caching technique is broadly applicable: consider using it in any situation where you are recomputing the same quantity many times and for which computing that quantity is a bottleneck operation.

Detecting unsolvable boards. Not all initial boards can lead to the goal board by a sequence of moves, including these two:

To detect such situations, use the fact that boards are divided into two equivalence classes with respect to reachability:

- Those that can lead to the goal board

- Those that can lead to the goal board if we modify the initial board by swapping any pair of tiles (the blank square is not a tile)

(Difficult challenge for the mathematically inclined: prove this fact.) To apply the fact, run the A* algorithm on two puzzle instances—one with the initial board and one with the initial board modified by swapping a pair of tiles—in lockstep (alternating back and forth between exploring search nodes in each of the two game trees). Exactly one of the two will lead to the goal board.

Summary

I have some sample solution here for this exercises.

The most important hints could be:

- You would need to implement a linked list type of structure to keep all the visited states and link them to the current state so to allow it to be traced back in the end.

- For that critical optimizaiton part where making sure we don't insert a visitedstate into the frontier again. I initially tried to put all the visited state in a large SET and each time check the common set whether it contains the state before inserting but that apparently is not always correct (and it is slow). One only need to check all the state in it's state chain to know whether it is a state can be inserted to frontier. Also as we are not dealing some type of circled graphs so to speed up we only needed to check the state (one of the neighbor) a single time with current state's previous state.

Binary search tree (BST)

Key-value pair abstraction - Map/Dictionary

- key -> value

- insert

- search/get

- delete

Binary search tree (BST)

- Each node has a key

- For every key:

- Larger than all keys in its left subtree

- Smaller than all keys in its right subtree

So with BST the drawback includes:

- No good guarantee that if insertion has order, the tree will be imbalanced. (The red black tree can help)

- when deleting: the order of growth of the expected height of a binary search tree with n keys after a long intermixed sequence of random insertions and random Hibbard deletions is:

sqrt(N). This currently is not optimized tolog Nand it is an open question.

Notes on delete

Balanced Search Trees

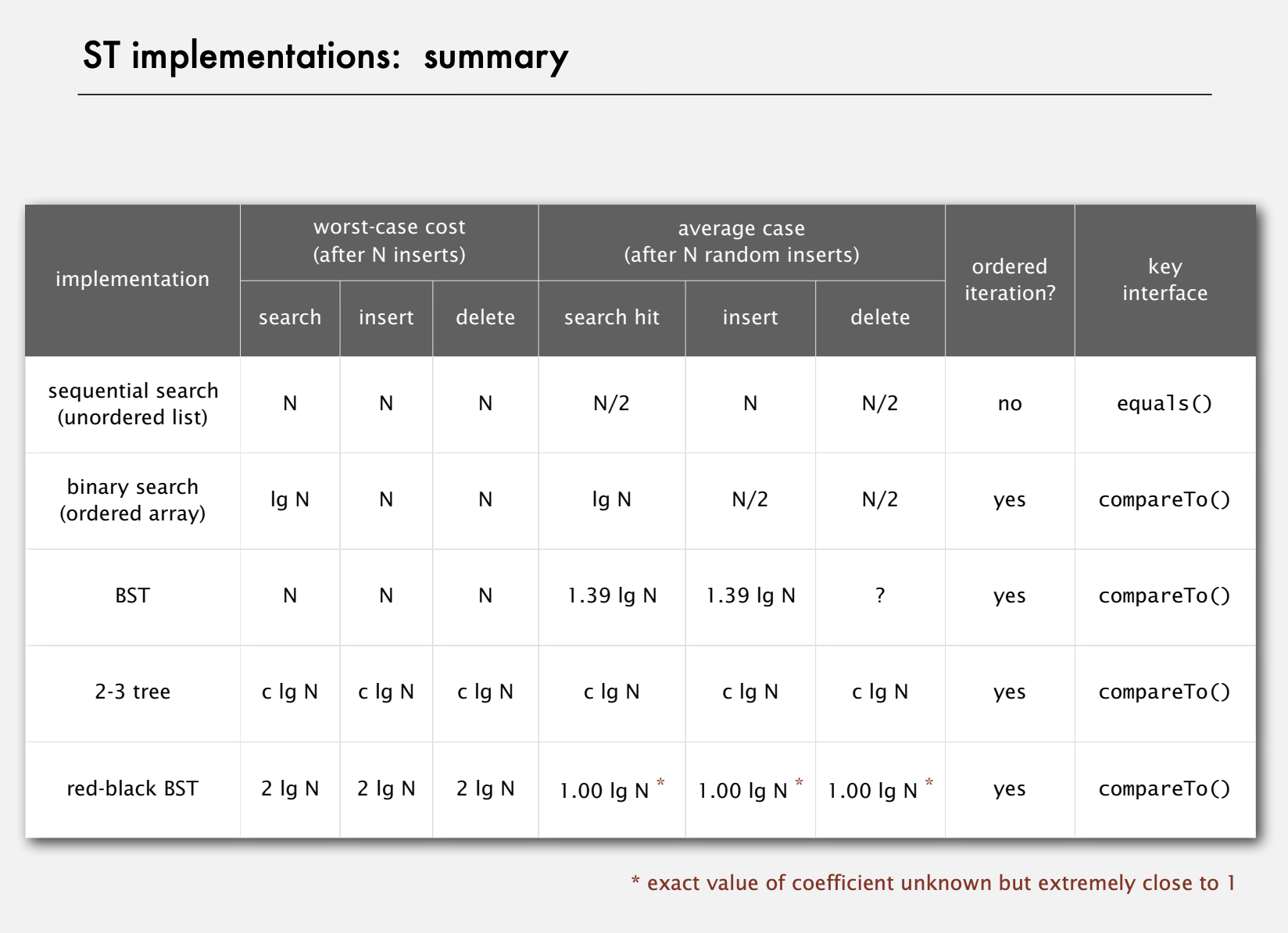

Binary Heapor priority queue is good formax()ormin()as it is only O(1), however it only supportdelMax/Min()and not good for search.Binary search treeis good for search, rank, insert and delete, also quite good for finding max and min, all these operation is O(log N), if the tree can be balanced.Hash Tableis super good for search/look-up (O(1)), insert and delete, but not good for operations such as rank, min, or max.

Red-Black BSTs

Link to course presentations.

BTS - binary search tree is a very good data structure

that has fast insert and search property. One key point

in making a BST to work well for any cases is to keep

the tree balanced, or to keep the height of tree as

low as possible. This is achieved via algorithms such

as 2-3 tree and Red-Black tree (which is a different

representation of 2-3 tree).

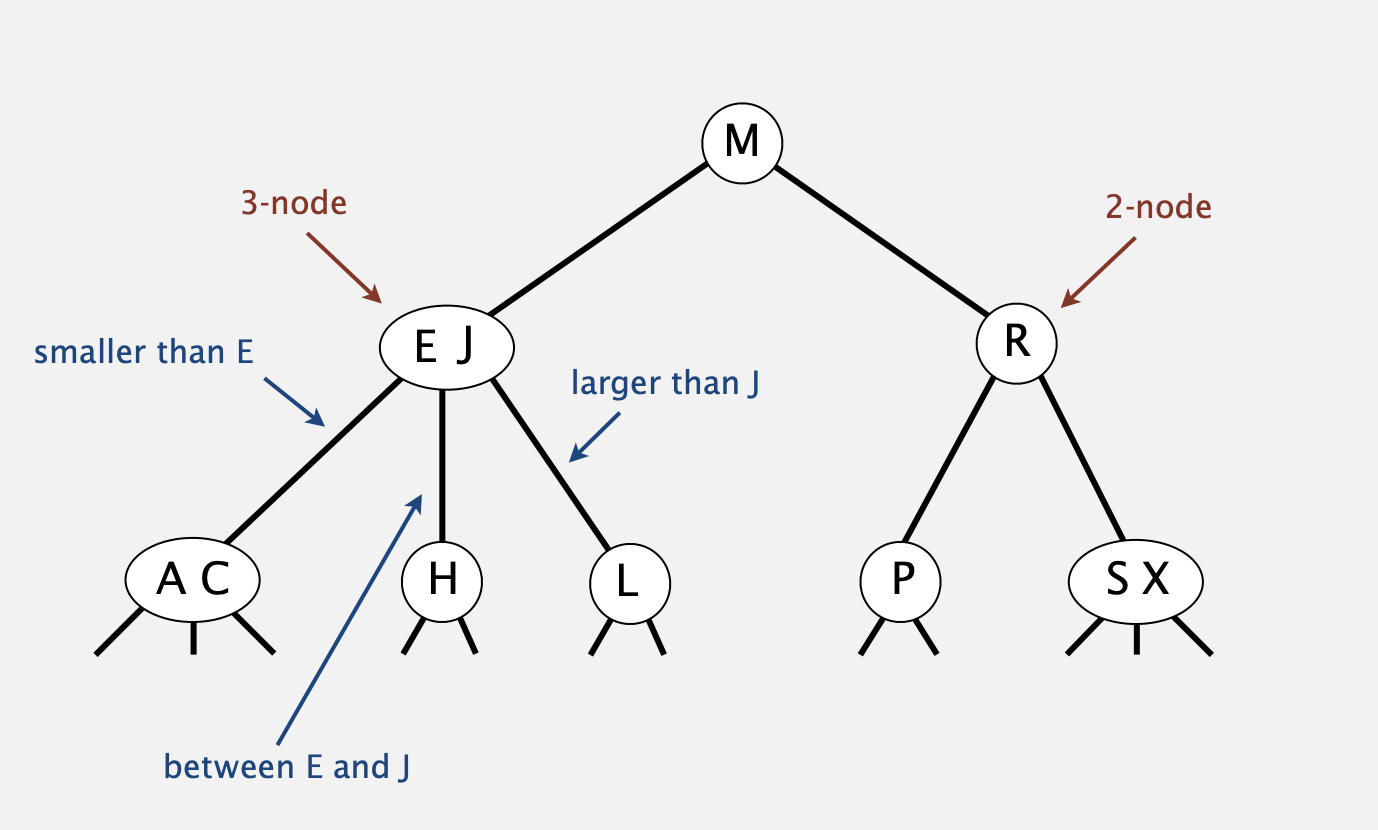

2-3 tree

Allow 1 or 2 keys per node.

- 2-node: one key, two children.

- 3-node: two keys, three children.

- 4-node: a special temporary condition, 4 children, only exist for a short time while inserting

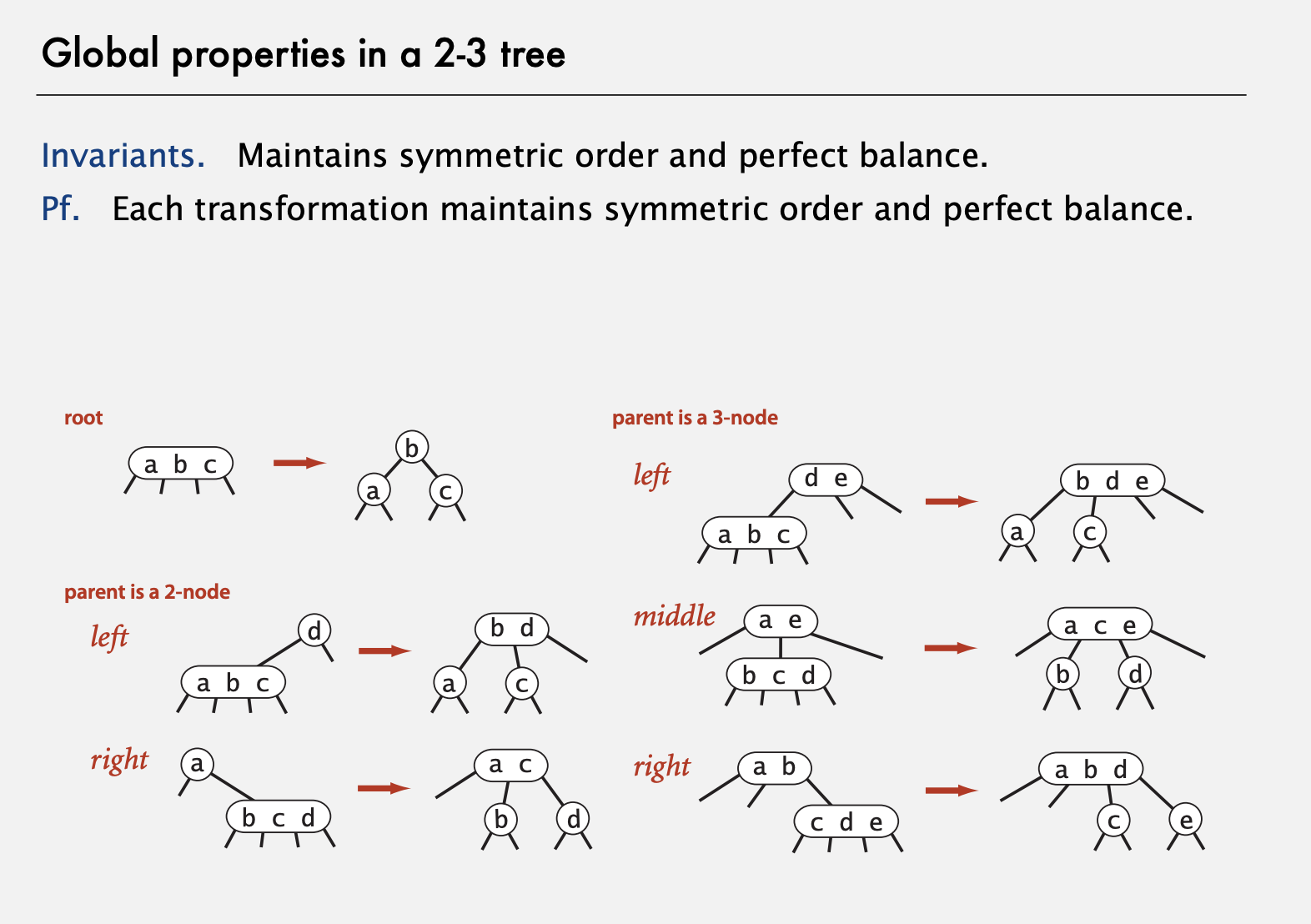

Guaranteed logarithmic performance for search and insert, worst case: \(height \leq c log_{2}(n) \)

Insertion into a 3-node at bottom.

- Add new key to 3-node to create temporary 4-node.

- Move middle key in 4-node into parent.

- Repeat up the tree, as necessary.

- If you reach the root and it's a 4-node, split it into three 2-nodes.

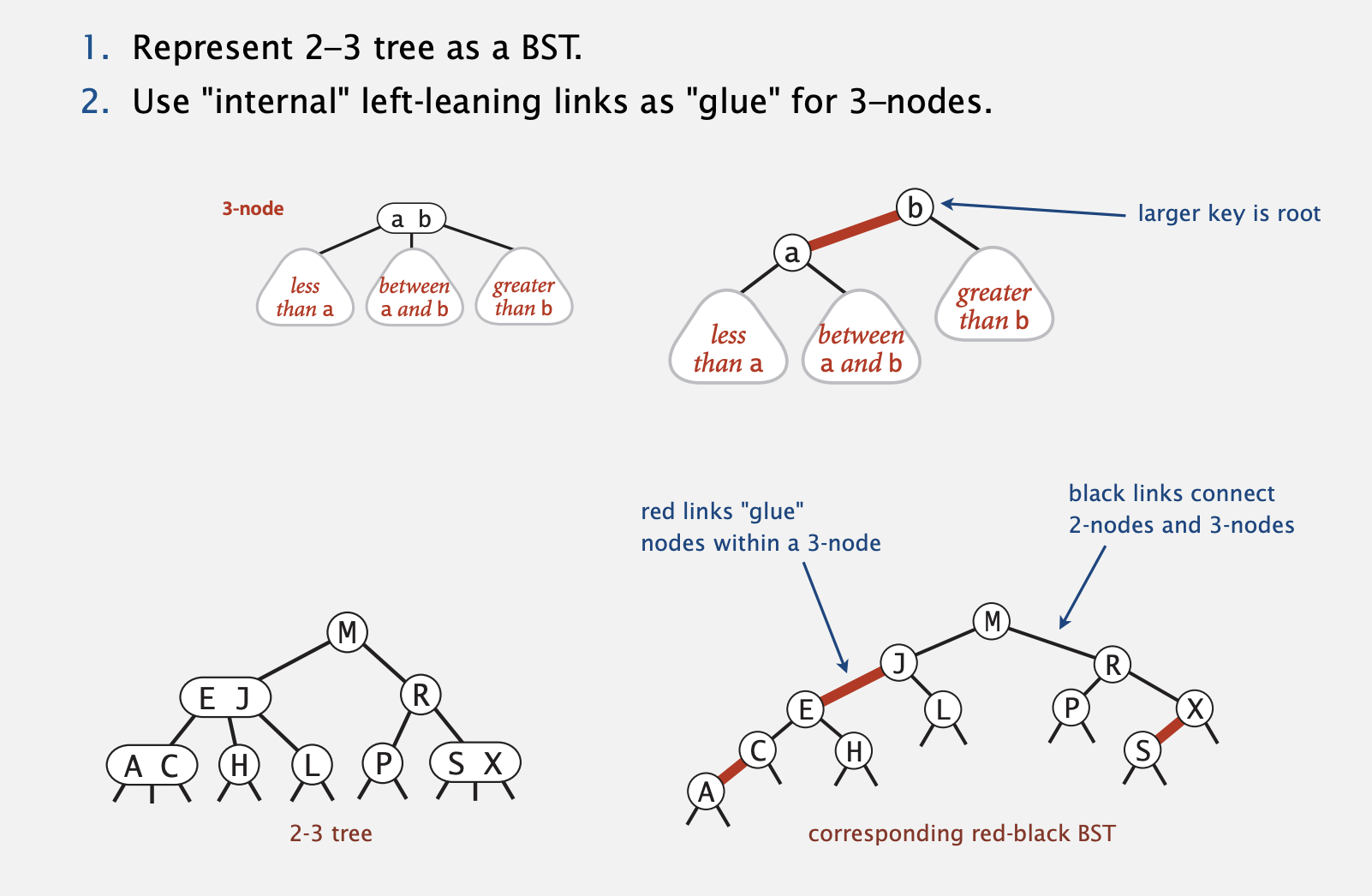

Left-leaning red-black BSTs (Guibas-Sedgewick 1979 and Sedgewick 2007)

Idea: ensure that hight always O(log N) (best possible)

A Red-Black BST that has rules:

- each node

redorblack - root is always black

- no red nodes in a row

- red node => only black children

- every root-Null path (like in an unsuccessful search) has the same number of black nodes

- red links lean left

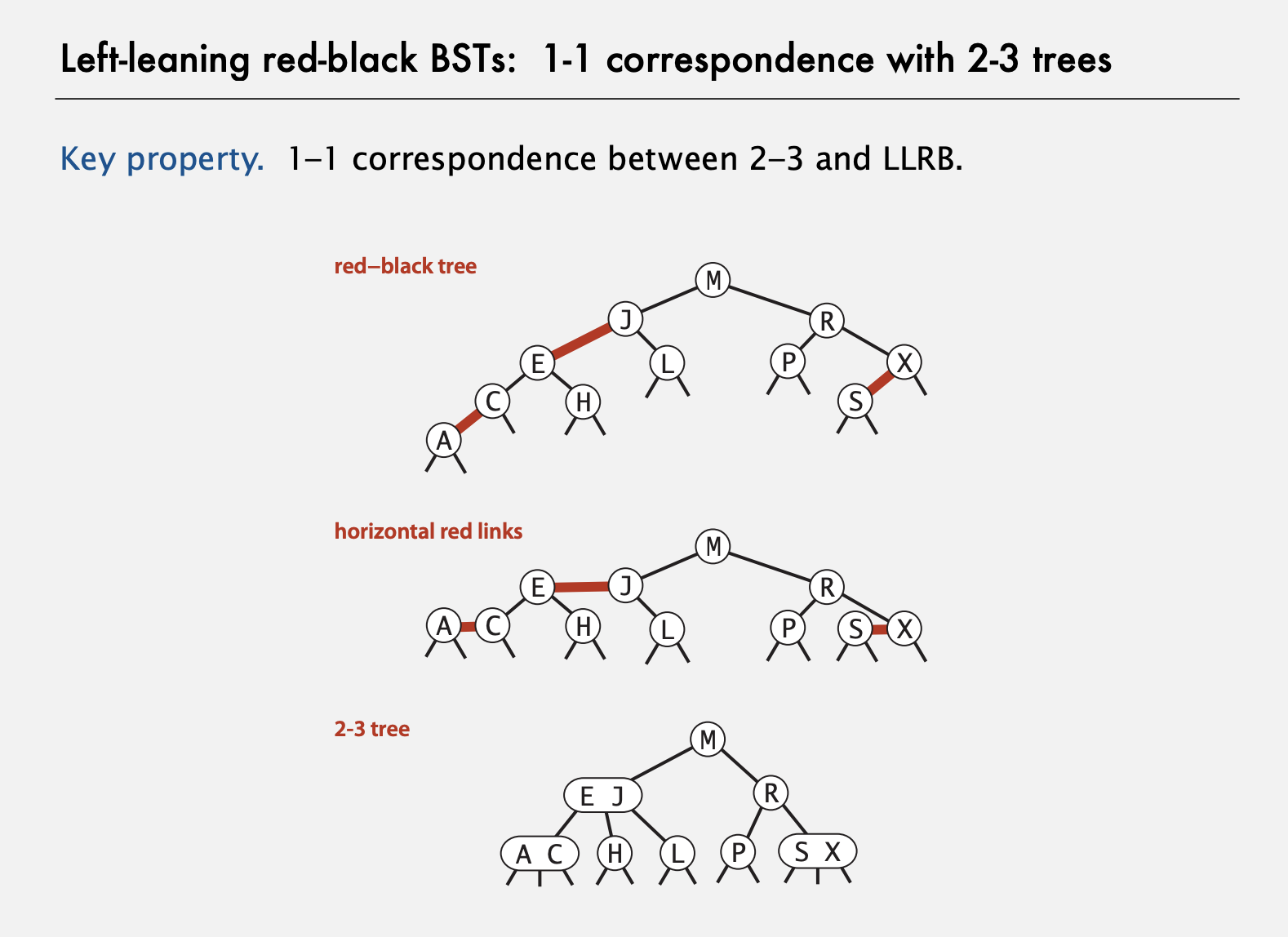

Left-leaning red-black BSTs: 1-1 correspondence with 2-3 trees

Hight Guarantee: if it is a red-black tree with n nodes, then it has \(height \leq 2 log_{2}(n + 1) \)

Every root to leaf path has the same number of black nodes

Sedgewick's friend wrote him a late night email telling him in this show they actually got the line for Red-Black tree correct. (And I don't think that helps with the ladies :) )

Click to see scripts...

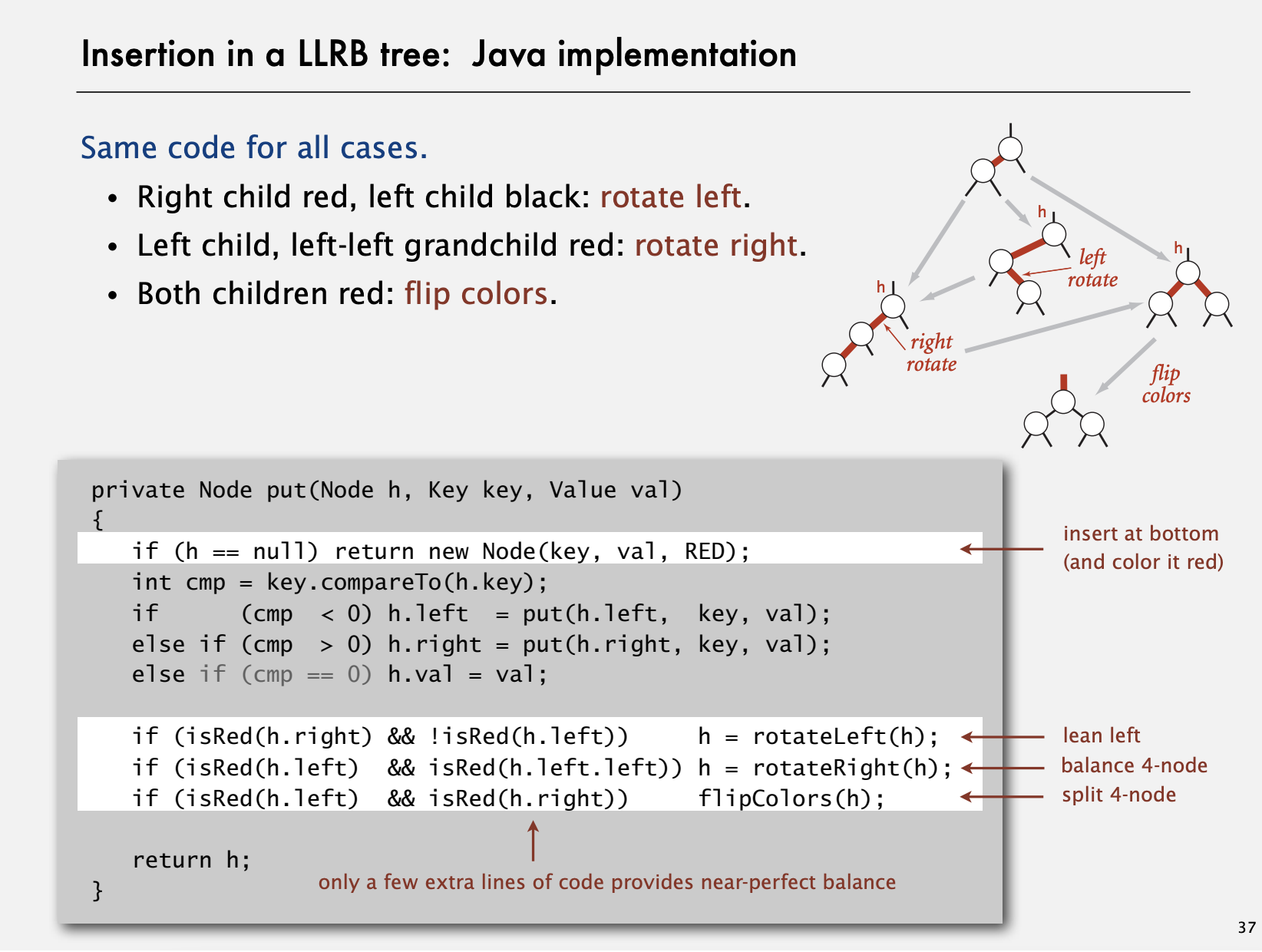

Achieved by 3 basic operations

- Left Rotation

- Right Rotation

- Flip Colors

B-Trees

Kd-Trees

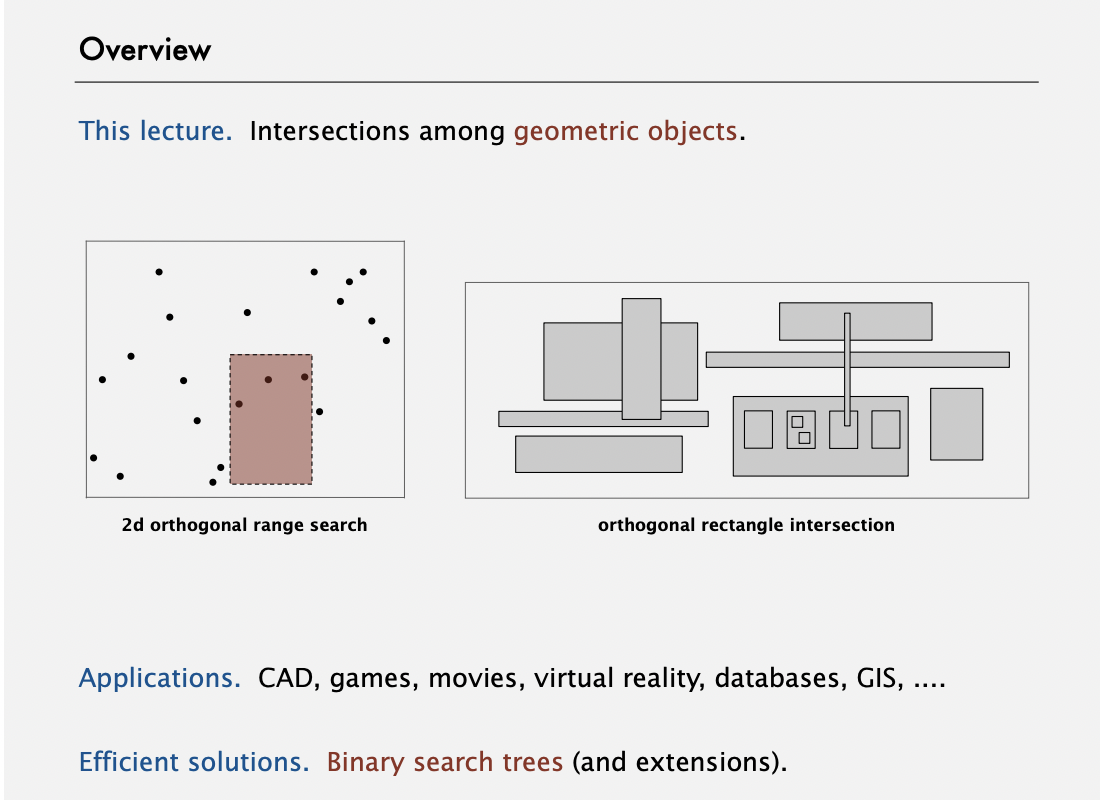

Geometric application of BSTs

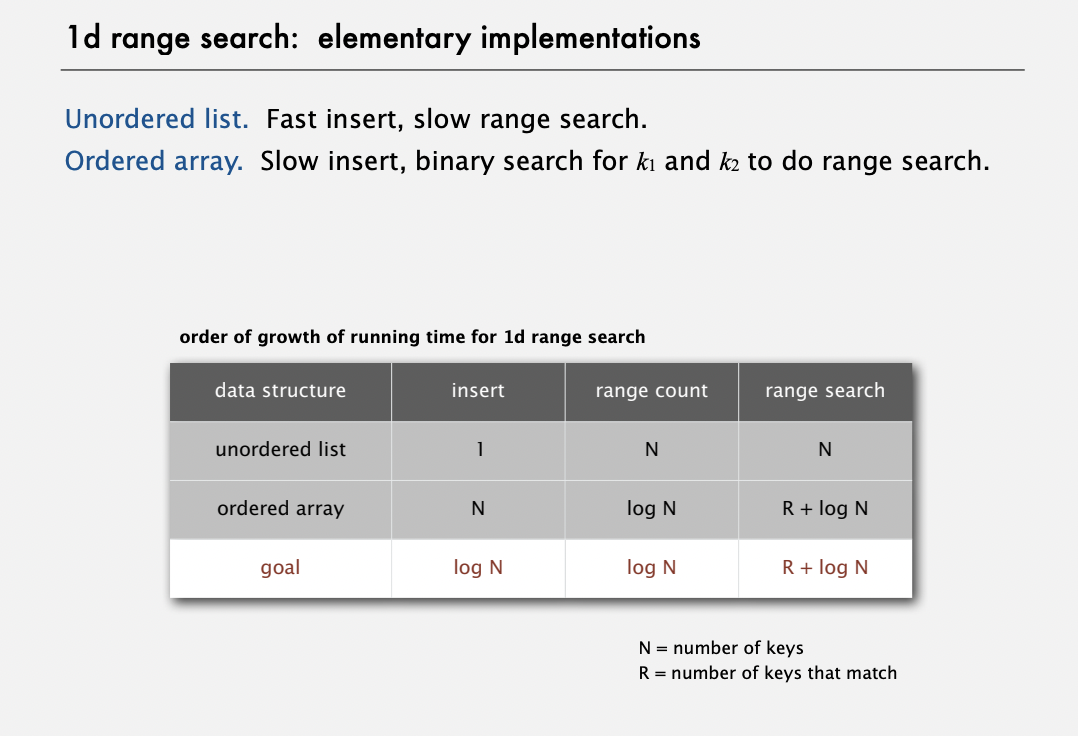

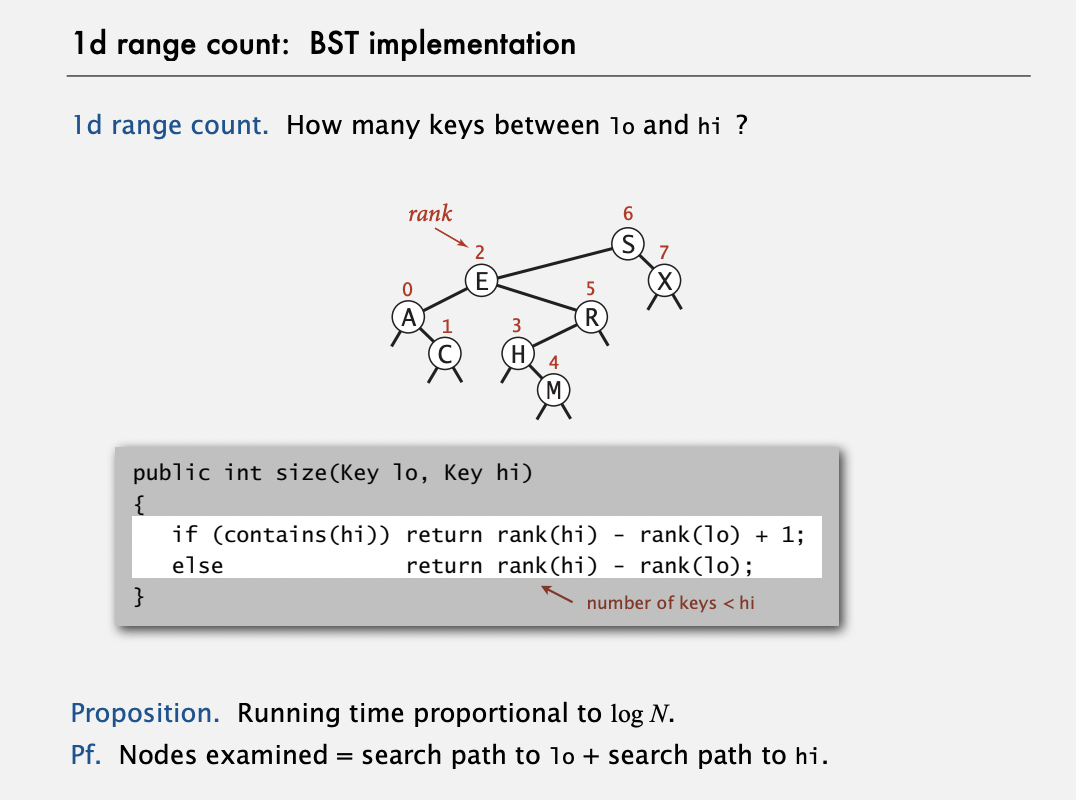

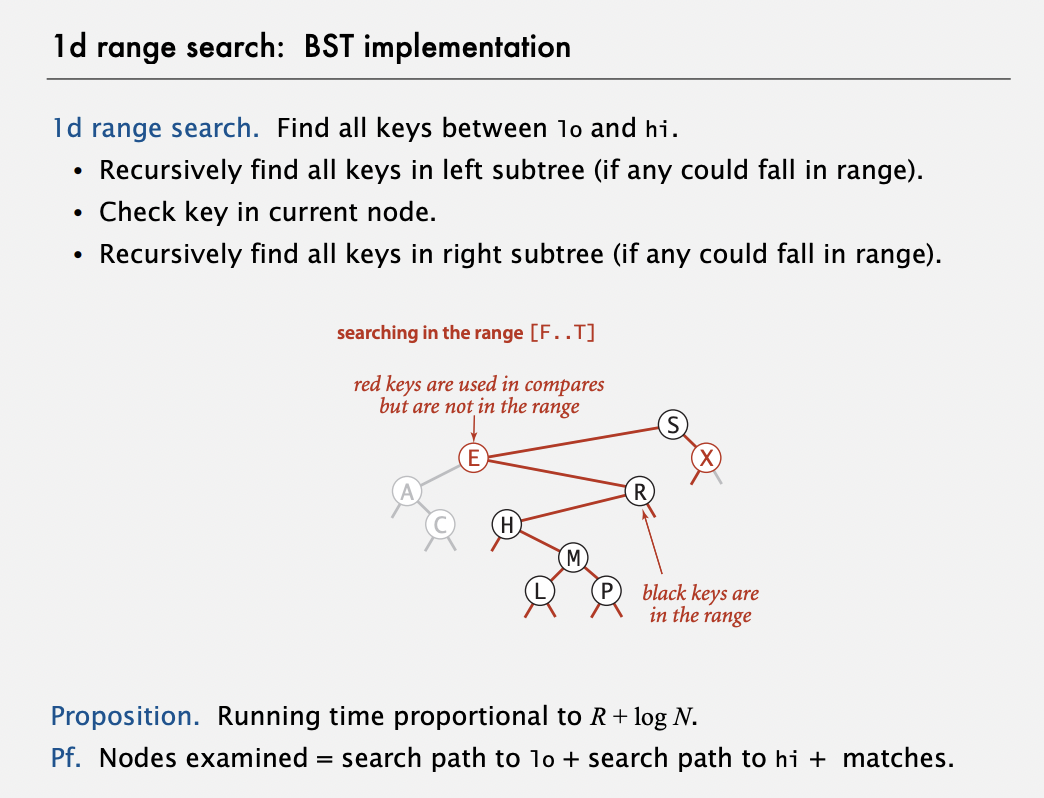

1d range search

1d range counton BST can be achieved with recursiverank()calls.

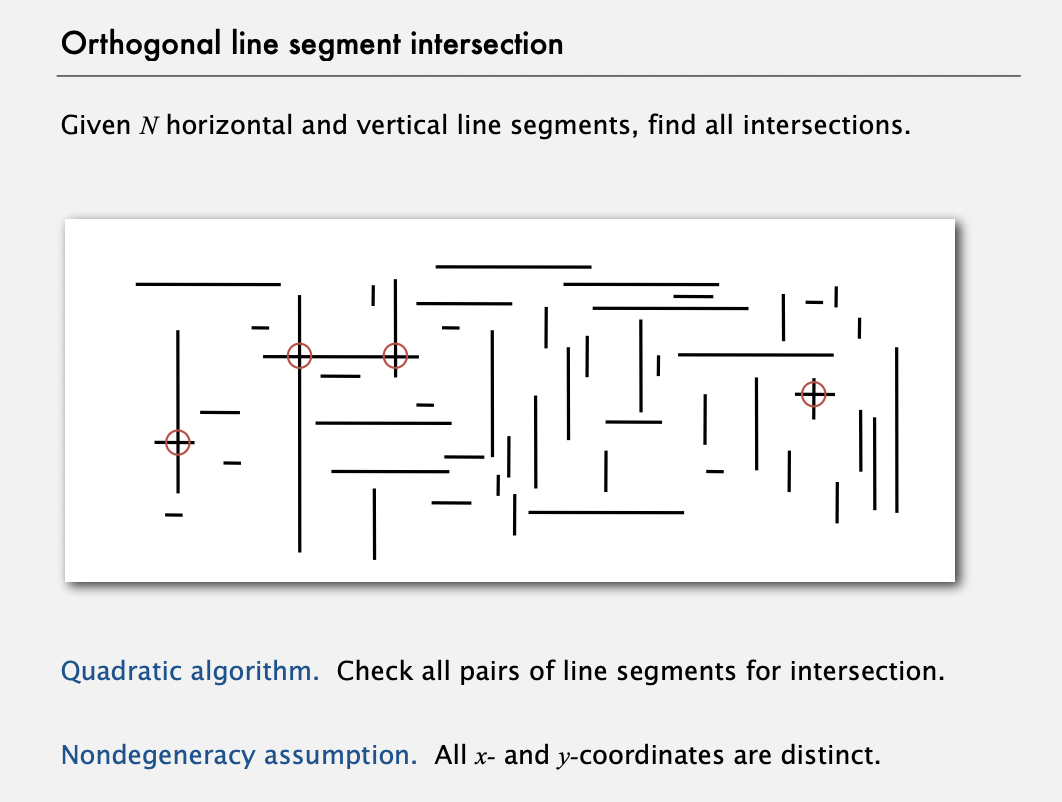

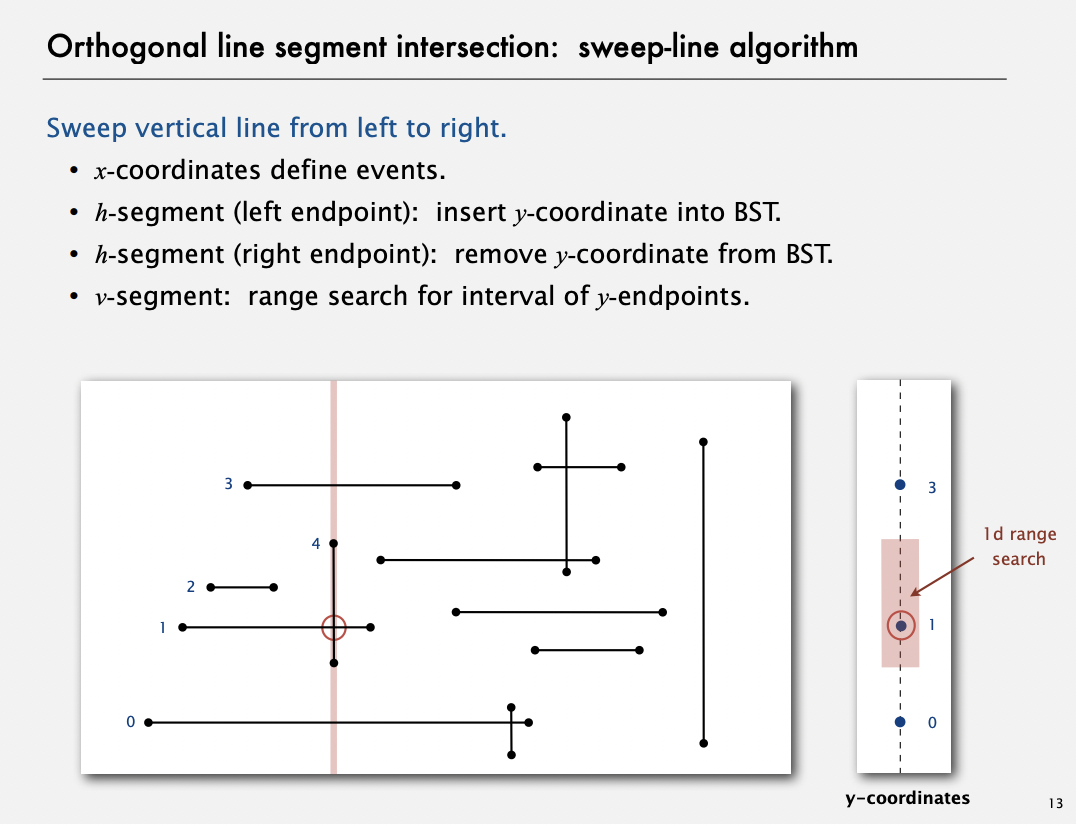

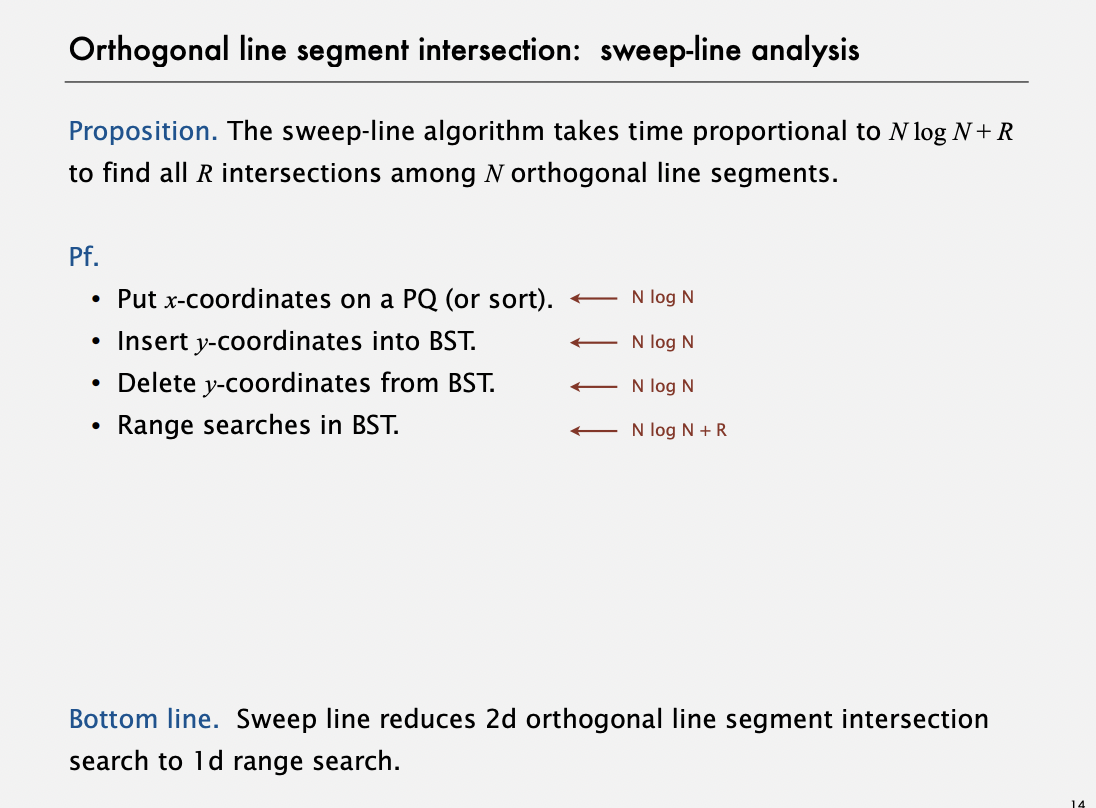

Orthogonal line segment intersection - sweep line algorithm

2-d orthogonal range search - Kd-trees

Kd tree: Recursively partition k-dimensional space into 2 halfspaces

Raft - learning from MIT 6.824

- Course info at https://pdos.csail.mit.edu/6.824/labs/lab-raft.html

- Paper at https://raft.github.io/raft.pdf

- A very good YouTube video

Raft Core Feature

If committed --meaning--> Present in the future leader's logs, and this is achieved by:

- Restrictions on leader election (See voting rules)

- Restrictions on commit

Leader Election

We firstly look at Leader Election mechanism - which is implemented by two parts:

RequestVote- each node start asFollowerand whenelection timeoutpasses and no HeartBeat received before, then a node step up asCandidateand send votes to rest of the nodes and if collect more than half (count itself as well), it steps up as aLeader, which keeps sendingHeartBeatto others.HeartBeat - a.k.a Empty AppendEntries RPC

Besides, the tips on the paper and the lab2a websites, I encountered a few other pitfalls (which might be mentioned somewhere in to docs)

Candidates

- In order to make the tests can pass with limited running time, I have to make sure when a candidate sends vote requests out and wait, it should stop waiting as long as it already got enough majority votes.

- The same applies when a leader is waiting for heatbeats. Don't wait after quorum votes have been fetched.

- Each places when a candidate/leader step down to a follower,

remember to set

votedForback tonilor-1so to allow it gives a valid vote in the next round of voting process. - Not like Follower, Candidates can probably send out the next round of vote

with a shorter and fixed Duration than

a Follower's

election timeout duration, in the case when this round of vote does not get enough votes; But at the same time

Getting vote request as Candidate

- It seems not necessary, but I also added the logic (seem to be safer): If myself is a Candidate and the other node (candidate) want me to vote to her, if we have the same term value, then I don't vote to her (so as she won't vote for me neither);

Standard Voting Rules when got Voting Request:

- If request term lass than my term, ignore. Reply my term.

- So when request term >= my term,

- From

Rules for Servers: If RPC request or response contains term T > currentTerm: set currentTerm = T, convert to follower - The candidate situation mentioned above

- if

notYetVotedorvotedTheSameBefore, then do the vote, setvotedForand reset myelection timeout; It is important to havevotedForset and checked here as it helps in the case where there is multiple candidates alive, then no multiple leaders are selected, as we make sure each voter can only vote to one candidate.

- From

- Restrictions on leader election

- When voting, include

lastLogIndexandlastLogTerm - Voting server

vdenies vote if its log is "more complete":if (lastTerm_v > lastTerm_c) || (lastTerm_v == lastTerm_c) && (lastIndex_v > lastIndex_c) return false

- When voting, include

HeartBeat - a.k.a Empty AppendEntries RPC

After the Leader is elected, the electionTimeoutTime or election timeout should then be

constantly refreshed with each HeartBeat - which is achieved by sending empty

AppendEntries RPC calls.

The basic rules are like:

- From

Rules for Servers: If RPC request or response contains term T > currentTerm: set currentTerm = T, convert to follower

The code could be something like this

func (rf *Raft) HeartBeat(args *HeartBeatRequest, reply *HeartBeatReply) {

rf.mu.Lock()

defer rf.mu.Unlock()

if args.Term >= rf.term {

rf.state = Follower // step down

rf.votedFor = args.From

rf.term = args.Term

rf.electionTimeoutTime = time.Now().Add(rf.getElectionTimeoutDuration())

reply.Good = true

reply.Term = rf.term

return

} else {

reply.Good = false

reply.Term = rf.term

return

}

}

AppendEntries RPC

Tricky part summary

Leader ticker and Candidate ticker

The candidate ticker can be done with just a single thread loop

and it is okay to set up a timeout as well. For the critical

conditions of TestFigure8Unreliable2C, it will mostly work

well as long as we make sure timeout is relatively large so

we can always have a candidate selected after a few rounds.

But for the leader ticker we cannot use single thread call with a long timeout anymore as if we do that, the timeout will be a time much longer than the election timeout of other followers then it ended up constantly new candidate showing up.

However, if we let leader ticker async, for example, start a goroutine and send a HeartBeat call every 100ms, then the other subtle issue is that we have to make sure, when those goroutines finishes in different time and order, we can still handle things correctly.

A few places to worth notice and mention:

- For each leader ticker goroutine, after getting reply from any followers, do a check of the leader's states and term as the leader might have already been converted to a follower by other leader

- when leader to update

nextIndexfor a follower, check it can only be larger thanmatchedIndexin case the HeartBeats to the same follower replied out of order.

Follower Log correction Speed-Up This is where the paper didn't say much, but basically there is two cases we need to speed-up follower log.

- During follower replying

HeartBeat, when follower'sLastLogTermis greater than leader'srf.log[nextIndex-1].Term(orargs.PrevLogTerm), so we rollnextIndexto the smaller side until it reaches an entry with the same term as leader'sPrevLogTerm, by cutting follower's log and replying to leader with a smallerLastLogIndex;

Also, when PrevLogIndex/Term matches, remove all the following entries that starts with a miss match of any entry term from leader's incoming entriesLeader: [0, 1, 1, 2, 2, 2, 4, 4,] Entries: ^ [2, 4, 4,] Follower: [0, 1, 1, 3, 3, ] then we should cut it to: [0, 1, 1, ] <-----------------------| ^-------new `nextIndex` found by `LastLogIndex`Leader: [0, 1, 1, 2, 2, 2, 4, 4,] Entries: ^ [2, 4, 4,] Follower: [0, 1, 1, 2, 2, 2, 3, 3, 3, 3] then we should cut it to: [0, 1, 1, 2, 2, 2, 4, 4,] <-----------------------| - During leader's handling of a follower's reply of

HeartBeat, where the follower'sLastLogTermis less than leader'srf.log[nextIndex-1].Term, so we rollnextIndexto the smaller side until it reaches an entry with the same term as follower'sLastLogTerm.Leader: [0, 1, 2, 2, 2, 2, 4, 4,] Entries: ^ [2, 4, 4,] Follower: [0, 1, 1, 1, 1, 1, 1, 1, ] then we should cut it to: [0, 1, 1, 1, ] <-----------------------------| ^--------------- and also send back `LastLogTerm` as 1 so to let leader set `nextIndex` to the place just after the last entry of term = LastLogTerm ^----------------- new `nextIndex` found by `LastLogTerm`

Async commit and updating rf.commitIndex

The actual apply of those logs and update of rf.commitIndex

can be done async with a single goroutine.

Important fix for TestFigure8Unreliable2C's commits not sync issue here - as we added some optimization on

- Leader only sends part of new entries when there are more than a max number (500 in this case) of new entries that are not on the follower

- When a Follower is handling the heartbeat, it does not touch the log if the incoming entries have been existing/duplicated, leaving some entries after the incoming entry part untouched/uncut as well. However, the entries in the latter part might not be valid.

So with those optimizations mentioned above, at the place when we update the follower's rf.commitIndex, we cannot go as far as the leader's commitIndex, but only goes to the end index of the incoming replicating entry parts - which is args.PrevLogIndex+len(args.Entries)

Or see some comments on this Lab2C PR

Cache

- Single Node

- LRU - least recently updated cache

- a map for keep values for search and delete

- a double-linked list as queue to remove least recently updated value

- LRU - least recently updated cache

- Distributed

- shard

- so to save more data in total

- each shard is on its own server

- one shard could be hot, and data can loss if one shard dies - solved by replication

- replication

- how to

- leader, follwer

- put/get to leader

- get to follwers

- leader sends data to follwer

- leader election methods

- using configuration service

- leader election in a shard group

- ruft, strong consistency

- gossip, enventual consistency

- leader, follwer

- adding availability

- okay to miss some set in corner cases, cache speed/latency is more important

- scaling out can also help solving hot shard issue

- how to

- consistent hashing

- multiple positions positions on circle for each nodes

- shard

- CAP

- Consistency (not favourd)

- Get on a replica after a set could be missed when data is replicating from leader to replicas async

- Cache servers might go down and up

- Availabilty (favoured)

- Consistency (not favourd)

- Data expiration

- during client fetch or via client expire call

- active with a vacuum/gc thread

- when # of keys are too large, use some probabilistic way to random check each key instand loop the key range

- Cache client

- all clients know about all cache servers and

should have the same list of servers

- Maintaining a list of cache server,

how?

- Use configuration management tools (e.g. Puppet) to deploy modified file to every service host

- Use a S3 file to share a config file

- Configuration service (e.g.

ZooKeeper, Redis Sentinel) -

discover cache host, monitoring

their health

- Each cache server connect and send heart beats

- Costly on build and maintain, but fully automize the list info update

- Another benefit

- Maintaining a list of cache server,

how?

- client store list of servers in sorted order by hash value (e.g. TreeMap)

- Binary search is used to identify server (log n)

- Use TCP or UDP protocol to talk to servers

- If server unavailable, client proceeds as though it was a cache miss

- all clients know about all cache servers and

should have the same list of servers

- Detailed topics

- Monitoring and logging

- Network IO

- QPS

- Miss rate

- security - should only exposed internally

- Monitoring and logging

- Questions

- I wonder how do you sync data into a newly started replica in a shard group. Simply copy all data in the leader's memory to the new replica node? Wouldn't that consumes quite a lot of the leader's CPU?

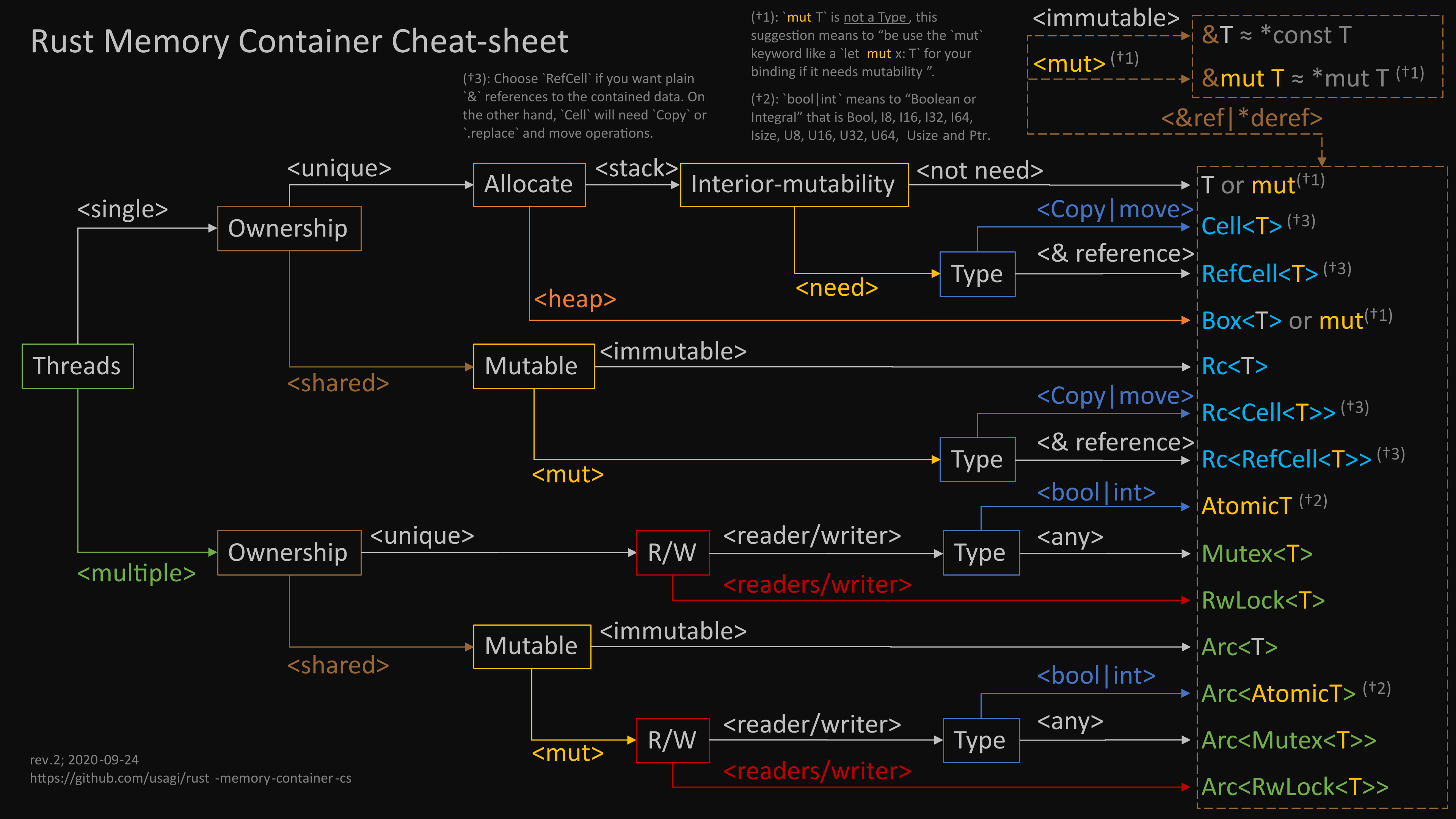

Rust note

C++ memory mode

Memory order

- Acquire / Release

- Sequentially Consistent (SeqCst)

- Relaxed

Acquire-Release

Sort of like mutex primitives, aquire=lock, release=unlock. Acquire and Release are largely intended to be paired.

-

releaseupdate the memory, "publish" to other threads, used only withstoretype operation(save/publish data out, cannot use with load) -

acquirememory "published" by other threads, making it available to us, used only withloadtype of operation (cannot use with store) -

For "load and store" type of operation:

releasewill make loadrelaxedand storereleaseacquirewill make loadacquireand storerelaxed

-

AcqRelor acquire and release also exist, so can be used for "load and store" type of operation, making loadacquireand storerelease(no relaxed). This operation order could be handy for situation such as for an Arc type of thing in the end to drop the internal object, it may use some operation likeload_and_minors_1_then_storeso you would use thisAcqRelto make sure the thread got the updated value and all other threads has updated value when they read. This is illustrated in the video.

Intuitively, an acquire access ensures that every access after it stays after it. However operations that occur before an acquire are free to be reordered to occur after it. Similarly, a release access ensures that every access before it stays before it. However operations that occur after a release are free to be reordered to occur before it.

When thread A releases a location in memory and then thread B subsequently acquires the same location in memory, causality is established. Every write (including non-atomic and relaxed atomic writes) that happened before A's release will be observed by B after its acquisition. However no causality is established with any other threads. Similarly, no causality is established if A and B access different locations in memory.

Or using the words from the video:

- acquire/release: no total order of events

- each thread has it view of consistent ordering

Basic use of release-acquire is therefore simple: you acquire a location of memory to begin the critical section, and then release that location to end it. For instance, a simple spinlock might look like:

use std::sync::Arc; use std::sync::atomic::{AtomicBool, Ordering}; use std::thread; fn main() { let lock = Arc::new(AtomicBool::new(false)); // value answers "am I locked?" // ... distribute lock to threads somehow ... // Try to acquire the lock by setting it to true while lock.compare_and_swap(false, true, Ordering::Acquire) { // c++ code here could be: std::this_threadd::yield() to let other thread run } // broke out of the loop, so we successfully acquired the lock! // ... scary data accesses, but safe to do stuff here ... // ok we're done, release the lock lock.store(false, Ordering::Release); }

Sequentially Consistent

Be cause there is no total order of events for acquire/release, Sequentially Consistent is introduced.

Sequentially Consistent is the most powerful of all, implying the restrictions of all other orderings. Intuitively, a sequentially consistent operation cannot be reordered: all accesses on one thread that happen before and after a SeqCst access stay before and after it. A data-race-free program that uses only sequentially consistent atomics and data accesses has the very nice property that there is a single global execution of the program's instructions that all threads agree on. This execution is also particularly nice to reason about: it's just an interleaving of each thread's individual executions. This does not hold if you start using the weaker atomic orderings.

The relative developer-friendliness of sequential consistency doesn't come for free. Even on strongly-ordered platforms sequential consistency involves emitting memory fences.

In practice, sequential consistency is rarely necessary for program correctness. However sequential consistency is definitely the right choice if you're not confident about the other memory orders. Having your program run a bit slower than it needs to is certainly better than it running incorrectly! It's also mechanically trivial to downgrade atomic operations to have a weaker consistency later on. Just change SeqCst to Relaxed and you're done! Of course, proving that this transformation is correct is a whole other matter.

Relaxed

Relaxed accesses are the absolute weakest. They can be freely re-ordered and provide no happens-before relationship. Still, relaxed operations are still atomic. That is, they don't count as data accesses and any read-modify-write operations done to them occur atomically. Relaxed operations are appropriate for things that you definitely want to happen, but don't particularly otherwise care about. For instance, incrementing a counter can be safely done by multiple threads using a relaxed fetch_add if you're not using the counter to synchronize any other accesses.

There's rarely a benefit in making an operation relaxed on strongly-ordered platforms, since they usually provide release-acquire semantics anyway. However relaxed operations can be cheaper on weakly-ordered platforms.

Pattern matching

Patterns come in two forms: refutable and irrefutable.

Patterns that will match for any possible value passed are irrefutable. An example would be x in the statement let x = 5; because x matches anything and therefore cannot fail to match.

(Meaning it always will have a match.)

Patterns that can fail to match for some possible value are refutable. An example would be Some(x) in the expression if let Some(x) = a_value because if the value in the a_value variable is None rather than Some, the Some(x) pattern will not match.

Pattern matching type

let- only irrefutable- fn param, closure - only irrefutable

matchexp - accept refutable and irrefutableif letexp - accept refutable and irrefutablewhile letexp - accept refutable and irrefutableforexp - only irrefutable

let

#![allow(unused)] fn main() { struct Point {x: isize, y: isize}; let (a, b) = (1, 2); let Point {x, y} = Point {x:3, y:4}; assert_eq!(3, x); assert_eq!(4, y); }

No need to write ref?

Compiler helps you adding ref when matching references with

non-references like expressions.

#![allow(unused)] fn main() { let x: Option<String> = Some("hello".into()); match &x { Some(s) => println!("{}", s), // nothing moves here, `s` is a &String, // the same as `Some(ref s) => ...`, with `ref` added by compiler behind the scene None => println!("nothing") } println!("{}", x.unwrap()); // x still owns the String }

Smart pointers

Smart pointers allow you to store data on the heap rather than the stack. What remains on the stack is the pointer to the heap data.

Box<T>for allocating values on the heapRc<T>, a reference counting type that enables multiple ownershipRef<T>andRefMut<T>, accessed throughRefCell<T>, a type that enforces the borrowing rules at runtime instead of compile time

#![allow(unused)] fn main() { let s_on_stack: Box<&str> = Box::new("hello"); // string is still on stack let s_on_heap: Box<str> = Box::from("hello"); // string is copied onto heap println!("s_on_stack = {}", s_on_stack); println!("s_on_heap = {}", s_on_heap); }

Using Deref trait to auto dereference

use std::ops::Deref; struct MySmartPointer<T>{hold: T} impl<T> MySmartPointer<T> { fn new(hold: T) -> MySmartPointer<T> { MySmartPointer{hold} } } impl<T> Deref for MySmartPointer<T> { type Target = T; fn deref(&self) -> &T { &self.hold } } // using it struct User { name: &'static str } impl User { fn print_name(&self) { println!("My name is {}", self.name); } } fn main() { let user_pointer = MySmartPointer::new(User {name: "Alex"}); user_pointer.print_name(); // auto deref }

Using Box for unknown size type

#[derive(Debug)] enum List { Cons(i32, List), Nil, } use crate::List::{Cons, Nil}; fn main() { let list = Cons(1, Cons(2, Cons(3, Nil))); println!("{:?}", list); // recursive type has infinite size, won't compile }

Above code won't compile as Rust cannot know the size of a List as it is recursive. Now we can use Box<> to make it's size known at compile time.

#[derive(Debug)] enum List { Cons(i32, Box<List>), Nil, } use crate::List::{Cons, Nil}; fn main() { let list = Cons(1, Box::new(Cons(2, Box::new(Cons(3, Box::new(Nil)))))); println!("{:?}", list); }

String

char

Actually a 4-byte fixed size or u32 type of value.

#![allow(unused)] fn main() { let tao: char = '道'; println!("'道' as u32: {}", tao as u32); // 36947 char is basically u32 size unicode println!("U+{:x}", tao as u32); // U+9053 - output in 0x or hexadecimal(16) format println!("{}", tao.escape_unicode()); // \u{9053} println!("{}", char::from(65)); // from a u8 -> 'A' println!("{}", std::char::from_u32(0x9053).unwrap()); // from a u32 println!("{}", std::char::from_u32(36947).unwrap()); // from a u32 println!("{}", std::char::from_u32(1234567).unwrap_or('_')); // not every u32 is a char // noticing a char uses 4-byte in memory but not all the space is always used assert_eq!(3, tao.len_utf8()); // effective data length in byte assert_eq!(4, std::mem::size_of_val(&tao)); }

String

String is basically a Vec<u8>.

Other type:

Cstr/CstringOsStr/OsStringPath/PathBuf

#![allow(unused)] fn main() { let tao = std::str::from_utf8(&[0xe9u8, 0x81u8, 0x93u8]).unwrap(); println!("{}", tao); let tao = String::from("\u{9053}"); println!("{}", tao); }

A char like String might not be a char

A single "char" looking thing - like ❤️ - doesn't means it is a valid char. Some of those single looking characters needs more than

one char or code points to be represented:

#![allow(unused)] fn main() { assert_eq!(6, String::from("❤️").len()); // length in byte assert_eq!(6, std::mem::size_of_val(String::from("❤️").as_str())); // same calculation as above assert_eq!(2, String::from("❤️").chars().count()); // how many `code points` - is 2? // as ❤️ takes 2 code points , we can't assign it to a char // let heart = '❤️'; // This won't work assert_eq!(1, String::from("道").chars().count()); // 道 can be defined as a char as it only has 1 code point assert_eq!('道', String::from("道").chars().next().unwrap()); assert_eq!(3, String::from("道").len()); assert_eq!(3, std::mem::size_of_val(String::from("道").as_str())); }

'é' is not 'é'

As always, remember that a human intuition for 'character' may not map to Unicode's definitions. For example, despite looking similar, the 'é' character is one Unicode code point while 'é' is two Unicode code points:

#![allow(unused)] fn main() { assert_eq!(1, String::from("é").chars().count()); // '\u{00e9}' -> latin small letter e with acute assert_eq!(2, String::from("é").chars().count()); // '\u{0065}' + '\u{0301}' -> U+0065: 'latin small letter e', U+0301: 'combining acute accent' // They look the same in editor but have different code points ?! }

Note for tokio dev

Frequently used command:

# Run a single integration test

cargo test --test tcp_into_std

# Run a single doc test

cargo test --doc net::tcp::stream::TcpStream::into_std

# Format code

rustfmt --edition 2018 $(find . -name '*.rs' -print)

SpinLock implementation

Naive unsafe implementation

#![allow(unused)] fn main() { use std::sync::atomic::AtomicBool; use std::sync::atomic::Ordering::{Acquire, Release}; use std::cell::UnsafeCell; pub struct SpinLockUnsafe<T> { locked: AtomicBool, value: UnsafeCell<T>, } unsafe impl<T> Sync for SpinLockUnsafe<T> where T: Send {} impl<T> SpinLockUnsafe<T> { pub const fn new(value: T) -> Self { Self { locked: AtomicBool::new(false), value: UnsafeCell::new(value), } } pub fn lock(&self) -> &mut T { while self.locked.swap(true, Acquire) { std::hint::spin_loop(); } unsafe { &mut *self.value.get() } } /// Safety: The &mut T from lock() must be gone! /// (And no cheating by keeping reference to fields of that T around!) pub unsafe fn unlock(&self) { self.locked.store(false, Release); } } }

Better safe version and much easier to use

With the help of a Guard trick we let unlock be triggered during a drop on a Guard.

Interesting pattern.

#![allow(unused)] fn main() { use std::sync::atomic::AtomicBool; use std::sync::atomic::Ordering::{Acquire, Release}; use std::cell::UnsafeCell; use std::ops::{Deref, DerefMut}; /// To be able to provide a fully safe interface, we need to tie the unlocking operation to /// the end of the &mut T. We can do that by wrapping this reference in our own type that /// behaves like a reference, but also implements the Drop trait to do something when it is dropped. /// /// Such a type is often called a guard, as it effectively guards the state of the lock, /// and stays responsible for that state until it is dropped. pub struct Guard<'a, T> { lock: &'a SpinLock<T>, } pub struct SpinLock<T> { locked: AtomicBool, value: UnsafeCell<T>, } unsafe impl<T> Sync for SpinLock<T> where T: Send {} impl<T> SpinLock<T> { pub const fn new(value: T) -> Self { Self { locked: AtomicBool::new(false), value: UnsafeCell::new(value), } } pub fn lock(&self) -> Guard<T> { while self.locked.swap(true, Acquire) { std::hint::spin_loop(); } Guard { lock: self } } } impl<T> Deref for Guard<'_, T> { type Target = T; fn deref(&self) -> &T { // Safety: The very existence of this Guard // guarantees we've exclusively locked the lock. unsafe { &*self.lock.value.get() } } } impl<T> DerefMut for Guard<'_, T> { fn deref_mut(&mut self) -> &mut T { // Safety: The very existence of this Guard // guarantees we've exclusively locked the lock. unsafe { &mut *self.lock.value.get() } } } impl<T> Drop for Guard<'_, T> { fn drop(&mut self) { self.lock.locked.store(false, Release); } } }

Resources

Good posts and links

- Rust: A unique perspective - Matt Brubeck

- What Are Tokio and Async IO All About? - Manish Goregaokar

- Async: What is blocking? - Alice Ryhl

Network Programming

http://beej.us/guide/bgnet/html/

Two Types of Internet Sockets

SOCK_STREAM-> TCPSOCK_DGRAM-> UDP, may lost, may out of order, but fast

Layered Network Model (Data Encapsulation)

- Application Layer (telnet, ftp, etc.)

- Host-to-Host Transport Layer (TCP, UDP) - port number (16-bit number)

- Internet Layer (IP and routing)

- Network Access Layer (Ethernet, wi-fi, or whatever)

See how much work there is in building a simple packet? All you have to do for stream sockets is send() the data out.

All you have to do for datagram sockets is encapsulate the packet in the method of your choosing and sendto() it out. The kernel builds the Transport Layer and Internet Layer on for you and the hardware does the Network Access Layer. Ah, modern technology.

System calls

bind() - What port am I on?

struct addrinfo hints, *res;

int sockfd;

// first, load up address structs with getaddrinfo():

memset(&hints, 0, sizeof hints);

hints.ai_family = AF_UNSPEC; // use IPv4 or IPv6, whichever

hints.ai_socktype = SOCK_STREAM;

hints.ai_flags = AI_PASSIVE; // fill in my IP for me

getaddrinfo(NULL, "3490", &hints, &res);

// make a socket:

sockfd = socket(res->ai_family, res->ai_socktype, res->ai_protocol);

// bind it to the port we passed in to getaddrinfo():

bind(sockfd, res->ai_addr, res->ai_addrlen);

connect() - Hey, you!

struct addrinfo hints, *res;

int sockfd;

// first, load up address structs with getaddrinfo():

memset(&hints, 0, sizeof hints);

hints.ai_family = AF_UNSPEC;

hints.ai_socktype = SOCK_STREAM;

getaddrinfo("www.example.com", "3490", &hints, &res);

// make a socket:

sockfd = socket(res->ai_family, res->ai_socktype, res->ai_protocol);

// connect!

connect(sockfd, res->ai_addr, res->ai_addrlen);

Also, notice that we didn’t call bind(). Basically, we don’t care about our local port number; we only care where we’re going (the remote port). The kernel will choose a local port for us, and the site we connect to will automatically get this information from us. No worries.

listen() - Keep on answering stuff

int listen(int sockfd, int backlog);

sockfd is the usual socket file descriptor from the socket() system call. backlog is the number of connections allowed on the incoming queue. What does that mean? Well, incoming connections are going to wait in this queue until you accept() them and this is the limit on how many can queue up. Most systems silently limit this number to about 20; you can probably get away with setting it to 5 or 10.

Well, as you can probably imagine, we need to call bind() before we call listen() so that the server is running on a specific port.

accept() - "Thank you for calling port 3490."

#include <sys/types.h>

#include <sys/socket.h>

int accept(int sockfd, struct sockaddr *addr, socklen_t *addrlen);

You call accept() and you tell it to get the pending connection. It’ll return to you a brand new socket file descriptor to use for this single connection! That’s right, suddenly you have two socket file descriptors for the price of one! The original one is still listening for more new connections, and the newly created one is finally ready to send() and recv()

send() and recv() - Talk to me, baby!

For UDP - sendto() and recvfrom().

int send(int sockfd, const void *msg, int len, int flags);

Example:

char *msg = "Beej was here!";

int len, bytes_sent;

.

.

len = strlen(msg);

bytes_sent = send(sockfd, msg, len, 0);

.

.

Remember, if the value returned by send() doesn’t match the value in len, it’s up to you to send the rest of the string. The good news is this: if the packet is small (less than 1K or so) it will probably manage to send the whole thing all in one go.

The recv() call is similar in many respects:

int recv(int sockfd, void *buf, int len, int flags);

recv() returns the number of bytes actually read into the buffer, or -1 on error (with errno set, accordingly).

Wait! recv() can return 0. This can mean only one thing: the remote side has closed the connection on you! A return value of 0 is recv()’s way of letting you know this has occurred.

int sendto(int sockfd, const void *msg, int len, unsigned int flags, const struct sockaddr *to, socklen_t tolen);

int recvfrom(int sockfd, void *buf, int len, unsigned int flags,struct sockaddr *from, int *fromlen);

close() and shutdown() —Get outta my face!

Examples!!!

/*

** server.c -- a stream socket server demo

*/

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#include <errno.h>

#include <string.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <netdb.h>

#include <arpa/inet.h>

#include <sys/wait.h>

#include <signal.h>

#define PORT "3490" // the port users will be connecting to

#define BACKLOG 10 // how many pending connections queue will hold

void sigchld_handler(int s)

{

// waitpid() might overwrite errno, so we save and restore it:

int saved_errno = errno;

while(waitpid(-1, NULL, WNOHANG) > 0);

errno = saved_errno;

}

// get sockaddr, IPv4 or IPv6:

void *get_in_addr(struct sockaddr *sa)

{

if (sa->sa_family == AF_INET) {

return &(((struct sockaddr_in*)sa)->sin_addr);

}

return &(((struct sockaddr_in6*)sa)->sin6_addr);

}

int main(void)

{

int sockfd, new_fd; // listen on sock_fd, new connection on new_fd

struct addrinfo hints, *servinfo, *p;

struct sockaddr_storage their_addr; // connector's address information

socklen_t sin_size;

struct sigaction sa;

int yes=1;

char s[INET6_ADDRSTRLEN];

int rv;

memset(&hints, 0, sizeof hints);

hints.ai_family = AF_UNSPEC;

hints.ai_socktype = SOCK_STREAM;

hints.ai_flags = AI_PASSIVE; // use my IP

if ((rv = getaddrinfo(NULL, PORT, &hints, &servinfo)) != 0) {

fprintf(stderr, "getaddrinfo: %s\n", gai_strerror(rv));

return 1;

}

// loop through all the results and bind to the first we can

for(p = servinfo; p != NULL; p = p->ai_next) {

if ((sockfd = socket(p->ai_family, p->ai_socktype,

p->ai_protocol)) == -1) {

perror("server: socket");

continue;

}

if (setsockopt(sockfd, SOL_SOCKET, SO_REUSEADDR, &yes,

sizeof(int)) == -1) {

perror("setsockopt");

exit(1);

}

if (bind(sockfd, p->ai_addr, p->ai_addrlen) == -1) {

close(sockfd);

perror("server: bind");

continue;

}

break;

}

freeaddrinfo(servinfo); // all done with this structure

if (p == NULL) {

fprintf(stderr, "server: failed to bind\n");

exit(1);

}

if (listen(sockfd, BACKLOG) == -1) {

perror("listen");

exit(1);

}

sa.sa_handler = sigchld_handler; // reap all dead processes

sigemptyset(&sa.sa_mask);

sa.sa_flags = SA_RESTART;

if (sigaction(SIGCHLD, &sa, NULL) == -1) {

perror("sigaction");

exit(1);

}

printf("server: waiting for connections...\n");

while(1) { // main accept() loop

sin_size = sizeof their_addr;

new_fd = accept(sockfd, (struct sockaddr *)&their_addr, &sin_size);

if (new_fd == -1) {

perror("accept");

continue;

}

inet_ntop(their_addr.ss_family,

get_in_addr((struct sockaddr *)&their_addr),

s, sizeof s);

printf("server: got connection from %s\n", s);

if (!fork()) { // this is the child process

close(sockfd); // child doesn't need the listener

if (send(new_fd, "Hello, world!", 13, 0) == -1)

perror("send");

close(new_fd);

exit(0);

}

close(new_fd); // parent doesn't need this

}

return 0;

}

/*

** client.c -- a stream socket client demo

*/

#include <stdio.h>

#include <stdlib.h>

#include <unistd.h>

#include <errno.h>

#include <string.h>

#include <netdb.h>

#include <sys/types.h>

#include <netinet/in.h>

#include <sys/socket.h>

#include <arpa/inet.h>

#define PORT "3490" // the port client will be connecting to

#define MAXDATASIZE 100 // max number of bytes we can get at once

// get sockaddr, IPv4 or IPv6:

void *get_in_addr(struct sockaddr *sa)

{

if (sa->sa_family == AF_INET) {

return &(((struct sockaddr_in*)sa)->sin_addr);

}

return &(((struct sockaddr_in6*)sa)->sin6_addr);

}

int main(int argc, char *argv[])

{

int sockfd, numbytes;

char buf[MAXDATASIZE];

struct addrinfo hints, *servinfo, *p;

int rv;

char s[INET6_ADDRSTRLEN];

if (argc != 2) {

fprintf(stderr,"usage: client hostname\n");

exit(1);

}

memset(&hints, 0, sizeof hints);

hints.ai_family = AF_UNSPEC;

hints.ai_socktype = SOCK_STREAM;

if ((rv = getaddrinfo(argv[1], PORT, &hints, &servinfo)) != 0) {

fprintf(stderr, "getaddrinfo: %s\n", gai_strerror(rv));

return 1;

}

// loop through all the results and connect to the first we can

for(p = servinfo; p != NULL; p = p->ai_next) {

if ((sockfd = socket(p->ai_family, p->ai_socktype,

p->ai_protocol)) == -1) {

perror("client: socket");

continue;

}

if (connect(sockfd, p->ai_addr, p->ai_addrlen) == -1) {

close(sockfd);

perror("client: connect");

continue;

}

break;

}

if (p == NULL) {

fprintf(stderr, "client: failed to connect\n");

return 2;

}

inet_ntop(p->ai_family, get_in_addr((struct sockaddr *)p->ai_addr),

s, sizeof s);

printf("client: connecting to %s\n", s);

freeaddrinfo(servinfo); // all done with this structure

if ((numbytes = recv(sockfd, buf, MAXDATASIZE-1, 0)) == -1) {

perror("recv");

exit(1);

}

buf[numbytes] = '\0';

printf("client: received '%s'\n",buf);

close(sockfd);

return 0;

}

Blocking

Lots of functions block. accept() blocks. All the recv() functions block. The reason they can do this is because they’re allowed to. When you first create the socket descriptor with socket(), the kernel sets it to blocking. If you don’t want a socket to be blocking, you have to make a call to fcntl():

#include <unistd.h>

#include <fcntl.h>

.

.

sockfd = socket(PF_INET, SOCK_STREAM, 0);

fcntl(sockfd, F_SETFL, O_NONBLOCK);

.

.

By setting a socket to non-blocking, you can effectively “poll” the socket for information. If you try to read from a non-blocking socket and there’s no data there, it’s not allowed to block — it will return -1 and errno will be set to EAGAIN or EWOULDBLOCK.

(Wait—it can return EAGAIN or EWOULDBLOCK? Which do you check for? The specification doesn’t actually specify which your system will return, so for portability, check them both.)

Generally speaking, however, this type of polling is a bad idea. If you put your program in a busy-wait looking for data on the socket, you’ll suck up CPU time like it was going out of style. A more elegant solution for checking to see if there’s data waiting to be read comes in the following section on poll().

poll() — Synchronous I/O Multiplexing

What you really want to be able to do is somehow monitor a bunch of sockets at once and then handle the ones that have data ready. This way you don’t have to continously poll all those sockets to see which are ready to read.